AI-as-a-service (AIaaS) provides customers with cloud-based access for integrating and using AI capabilities in their projects or applications without needing to build and maintain their own AI infrastructure. It also offers prebuilt and pretrained models for basic uses like chatbots so the customer doesn’t have to go through the process of training their own.

“Basically, AI-as-a-service enables you to accelerate your application engineering and delivery of AI technologies in your enterprise,” said Chirag Dekate, vice president and analyst for AI infrastructures and supercomputing at Gartner.

AI-as-a-service offers three entry points: the application level, the model engineering level, and the custom model development level, he said. If you’re a relatively low-maturity enterprise and want to get started in genAI, you can leverage it at the application layer. Or if you want to manage your own models, you can do so deeper down the stack.

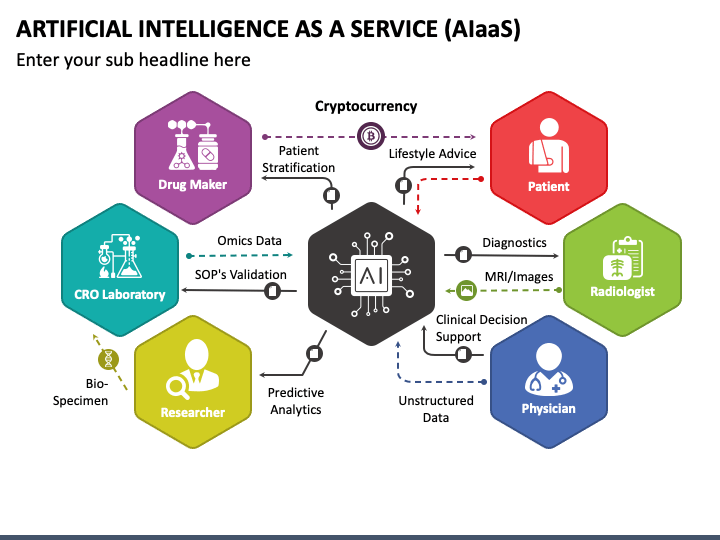

AIaaS providers offer data preparation, since customer data is often unstructured. They can also train models supplied by the customer or let the customer use the prebuilt AI models they provide. These prebuilt models, trained on massive data sets, can perform tasks like image recognition, data analysis, natural language processing, and predictive analytics.

You access the services through APIs or user interfaces. This allows you to easily integrate the AI functionality into your own applications or platforms, often with minimal programming required.
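As a rough illustration of what API-based access looks like, the sketch below builds (but does not send) an HTTP request to a sentiment-analysis service using only Python's standard library. The endpoint URL, the JSON field name, and the bearer-token header are hypothetical placeholders, not any specific provider's API; real services document their own paths, payloads, and authentication.

```python
import json
import urllib.request

# Hypothetical endpoint for illustration only; use your provider's documented URL.
EXAMPLE_ENDPOINT = "https://api.example.com/v1/sentiment"


def build_sentiment_request(text: str, api_key: str) -> urllib.request.Request:
    """Build a POST request that carries the text to analyze as a JSON body."""
    payload = json.dumps({"text": text}).encode("utf-8")
    return urllib.request.Request(
        EXAMPLE_ENDPOINT,
        data=payload,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",  # assumed auth scheme
        },
        method="POST",
    )


request = build_sentiment_request("The new release is great.", "YOUR_API_KEY")
print(request.get_method(), request.full_url)
```

Sending the request would be a call to `urllib.request.urlopen(request)` followed by parsing the JSON response, which is typically all the "minimal programming" such an integration needs.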

Most AI-as-a-service providers offer a pay-as-you-go model, either through metered use or a flat rate. AIaaS is much pricier than traditional IaaS or PaaS. Nvidia, for example, charges a flat rate of $37,000 per month to use its DGX Cloud service.

AI-as-a-service is no small effort. While newer players like Nvidia, OpenAI, and even some managed service providers are getting in on the act, the major players in AIaaS today are the giant cloud service providers, since they’re the companies with the financial wherewithal to support AIaaS at enterprise scale.

The leading AIaaS offerings include:

I. AWS AI

Amazon Web Services has a rather broad array of AI services, starting with prebuilt, ready-to-use services that can help jumpstart an AI project and get around the need for experienced data scientists and AI developers. These services include Amazon Translate (real-time language translation), Amazon Rekognition (image and video analysis), Amazon Polly (text-to-speech), and Amazon Transcribe (speech-to-text).

Managed infrastructure tools include Amazon SageMaker for building, training, and deploying machine learning (ML) models; Amazon Machine Learning (AML), with drag-and-drop tools and templates that simplify building and deploying ML models; Amazon Comprehend for natural language processing; Amazon Forecast for time series forecasting; and Amazon Personalize for personalized product and content suggestions.

Under generative AI, AWS offers Amazon Lex for building conversational AI bots, Amazon CodeGuru for code analysis and recommendations that improve code quality and security, and Amazon Kendra for intelligent search.

II. Microsoft Azure AI

Microsoft’s AI services are geared towards developers and data scientists and are built on Microsoft applications such as SQL Server, Office, and Dynamics. Microsoft has integrated AI across its various business applications in the cloud and on premises.

Microsoft is also a dealmaker, securing partnerships and collaborating with leading AI companies including ChatGPT creator OpenAI. Many AI apps are available in the Azure Marketplace.

The vendor offers a number of prebuilt AI services, such as speech recognition, text analysis, translation, vision processing, and machine learning model deployment. It also has an OpenAI Service with pretrained large language models (LLMs) like GPT-3.5, Codex, and DALL-E 2.

III. Google Cloud AI

Google’s AI service specializes in data analytics, with tools like BigQuery and AI Platform. It also offers the AutoML service, which features automated model building for users with less coding experience.

Google Cloud AI offers a unified platform called Vertex AI to streamline the AI workflow, simplifying development and deployment. It also offers a wide range of services with prebuilt solutions, custom model training, and generative AI tools.

The AI Workbench is a collaborative environment for data scientists and developers to work on AI projects, with AutoML to automate much of the machine learning workflow and MLOps to manage the ML life cycle more efficiently by ensuring that ML models are developed, tested, and deployed in a consistent and reliable way.

Google Cloud AI has a number of prebuilt AI solutions:

* Dialogflow: A conversational AI platform for building chatbots and virtual assistants.

* Natural Language API: Analyzes text for sentiment, entity extraction, and other tasks.

* Vision AI: Processes images and videos for object detection, scene understanding, and more.

* Translation API: Provides machine translation across various languages.

* Speech-to-Text and Text-to-Speech: Converts between spoken language and text.

Vertex AI Search and Conversation is a suite of tools specifically designed for building generative AI applications like search engines and chatbots, with more than 130 foundational pretrained language models like PaLM and Imagen for advanced text generation and image creation.

Google recently announced its new Gemini model, the successor to its Bard chatbot. Gemini is reportedly capable of far more complex math and science generation, as well as generating advanced code in different programming languages. It comes in three versions: Gemini Nano for smartphones; Gemini Pro, accessible on PCs and running in Google data centers; and Gemini Ultra, still in development but said to be a much higher-end version than Pro.

IV. IBM Watsonx

IBM Watson AI-as-a-service, now known as Watsonx, is a comprehensive array of AI tools and services known for its emphasis on automating complex business processes and its industry-specific solutions, particularly in healthcare and finance.

Watsonx.ai Studio is the core of the platform, where you can train, validate, tune, and deploy AI models, including both machine learning models and generative AI models. The Data Lakehouse is a secure and scalable storage system for all your data, both structured and unstructured.

The AI Toolkit is a collection of pre-built tools and connectors that make it easy to integrate AI into your existing workflows. These tools can automate tasks, extract insights from data, and build intelligent applications.

Watsonx also includes a number of pretrained AI models that you can use immediately and without any training required. These models cover tasks such as natural language processing, computer vision, and speech recognition.

V. Oracle Cloud Infrastructure (OCI) AI Services

Oracle has so far been running well behind the market leaders in cloud services, but it does have several advantages worth considering. Oracle is, after all, a major business application company, from its database to its applications. All of its on-premises applications can be extended to the cloud for a hybrid setup, which makes moving your on-premises data to the cloud for data prep and training straightforward. Microsoft has a similar advantage with its legacy on-prem applications.

Oracle has invested heavily in GPU technology, currently the primary means of data processing in AI. So if you want to run AI apps on Nvidia technology, Oracle has you covered. And Oracle’s AI services are the most affordable among the cloud service providers, an important consideration given how expensive AI-as-a-service can get.

OCI AI Services presents a diverse portfolio of tools and services to empower businesses with various AI functionalities. Like IBM’s Watsonx, it’s not just one service but a collection of capabilities catering to different needs including fraud detection and prevention, speech recognition, language analysis, document understanding, and more.

Oracle’s generative AI Service supports LLMs like Cohere and Meta’s Llama 2, enabling tasks like writing assistance, text summarization, chatbots, and code generation. Oracle Digital Assistant’s prebuilt chatbot frameworks allow for rapid development and deployment of voice- and text-based conversational interfaces.

Oracle’s Machine Learning Services offer tools for data scientists to collaboratively build, train, deploy, and manage custom machine learning models. They support popular open-source frameworks like TensorFlow and PyTorch.

Finally, there is OCI Data Science, which provides virtual machines with preconfigured environments for data science tasks, including Jupyter notebooks and access to popular libraries, simplifying your data exploration and model development workflow.

VI. Nvidia

Nvidia's latest and fastest GPU, code-named Blackwell, is here and will underpin the company's AI plans this year. The chip offers performance improvements over its predecessors, including the red-hot H100 and A100 GPUs. Customers are demanding more AI performance, and the new GPUs are well positioned to meet the pent-up demand for higher-performing hardware.

The GPU can train 1 trillion parameter models, said Ian Buck, vice president of high-performance and hyperscale computing at Nvidia, in a press briefing.

Systems with up to 576 Blackwell GPUs can be paired up to train multi-trillion parameter models.

The GPU has 208 billion transistors and was made using TSMC's 4-nanometer process. That is about 2.5 times more transistors than the predecessor H100 GPU, which is the first clue to significant performance improvements.

AI is a memory-intensive process, and data needs to be temporarily stored in RAM. The GPU has 192GB of HBM3E memory, the same memory type used in last year's H200 GPU.

Nvidia is focusing on scaling the number of Blackwell GPUs to take on larger AI jobs. "This will expand AI data center scale beyond 100,000 GPU," Buck said.

The GPU provides "20 petaflops of AI performance on a single GPU," Buck said.

Buck provided fuzzy performance numbers designed to impress, and real-world performance numbers were unavailable. However, it is likely that Nvidia used FP4 – a new data type with Blackwell – to measure performance and reach the 20-petaflop performance number.

The predecessor H100 provided about 4 petaflops of performance for the FP8 data type and about 2 petaflops of performance for FP16.

"It delivers four times the training performance of Hopper, 30 times the inference performance overall, and 25 times better energy efficiency," Buck said.

The FP4 data type is for inferencing and will allow for the fastest computing of smaller packages of data and deliver the results back much faster. The result? Faster AI performance but less precision. FP64 and FP32 provide more precision computing but are not designed for AI.
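To make the precision trade-off concrete, the sketch below rounds a few numbers to the nearest value a 4-bit float can represent. It assumes the E2M1 layout (one sign bit, two exponent bits, one mantissa bit), a common FP4 encoding; the article does not say which encoding Blackwell uses, and real hardware typically adds scaling factors that are omitted here. The function and variable names are illustrative.

```python
# Non-negative magnitudes representable in an E2M1 4-bit float (assumed layout).
E2M1_MAGNITUDES = (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0)

# Full set of representable values: the magnitudes plus their negatives.
FP4_VALUES = sorted(set(E2M1_MAGNITUDES) | {-m for m in E2M1_MAGNITUDES if m > 0})


def quantize_fp4(value: float) -> float:
    """Round to the nearest representable FP4 value, saturating at +/-6."""
    return min(FP4_VALUES, key=lambda candidate: abs(candidate - value))


weights = [0.07, 0.3, 1.2, 2.4, 5.1, -0.8]
quantized = [quantize_fp4(w) for w in weights]
print(quantized)  # [0.0, 0.5, 1.0, 2.0, 6.0, -1.0]
```

With only 15 distinct values available, small weights collapse to zero and large ones snap to coarse steps, which is the precision cost paid for moving and computing on half as many bits as FP8.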

The GPU consists of two dies packaged together. They communicate via an interface called NV-HBI, which transfers information at 10 terabytes per second. Blackwell's 192GB of HBM3E memory is supported by 8 TB/sec of memory bandwidth.
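A back-of-envelope calculation helps show why per-GPU memory matters for the trillion-parameter models mentioned earlier. The sketch below counts only the bytes needed to store the weights of a 1-trillion-parameter model at FP16, FP8, and FP4, and how many 192GB GPUs that would take. It is a lower bound under stated assumptions: it ignores activations, optimizer state, and caches, and the function names are illustrative.

```python
import math

GPU_MEMORY_GB = 192              # HBM3E capacity per Blackwell GPU, per the article
PARAMETERS = 1_000_000_000_000   # a 1-trillion-parameter model

# Bytes needed to store one weight in each data type.
BYTES_PER_PARAMETER = {"FP16": 2.0, "FP8": 1.0, "FP4": 0.5}


def weight_footprint_gb(parameters: int, bytes_per_parameter: float) -> float:
    """Size of the weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return parameters * bytes_per_parameter / 1e9


def min_gpus_for_weights(parameters: int, bytes_per_parameter: float) -> int:
    """Smallest number of GPUs whose combined memory can hold the weights."""
    footprint = weight_footprint_gb(parameters, bytes_per_parameter)
    return math.ceil(footprint / GPU_MEMORY_GB)


for name, size in BYTES_PER_PARAMETER.items():
    footprint = weight_footprint_gb(PARAMETERS, size)
    gpus = min_gpus_for_weights(PARAMETERS, size)
    print(f"{name}: {footprint:,.0f} GB of weights, at least {gpus} GPUs")
```

Under these assumptions the weights alone need at least 11 GPUs at FP16, 6 at FP8, and 3 at FP4, which is one reason lower-precision data types and multi-GPU systems go hand in hand.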

Nvidia has also created systems with Blackwell GPUs and Grace CPUs. First, it created the GB200 superchip, which pairs two Blackwell GPUs with its Grace CPU. Second, the company created a full rack system with liquid cooling called the GB200 NVL72. It has 36 GB200 superchips, for a total of 72 GPUs, interconnected in a grid format.

The GB200 NVL72 system delivers 720 petaflops of training performance and 1.4 exaflops of inferencing performance. It can support 27-trillion parameter model sizes. The GPUs are interconnected via a new NVLink interconnect, which has a bandwidth of 1.8TB/s.
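The rack-level figures can be cross-checked against the per-GPU claim with simple arithmetic. The sketch below uses only numbers quoted in this article; the variable names are illustrative, and the results are rough because the published figures are rounded.

```python
SUPERCHIPS_PER_RACK = 36
GPUS_PER_SUPERCHIP = 2          # each GB200 superchip carries two Blackwell GPUs
TRAINING_PETAFLOPS = 720        # rack-level training figure
INFERENCE_PETAFLOPS = 1_400     # 1.4 exaflops expressed in petaflops

gpus_per_rack = SUPERCHIPS_PER_RACK * GPUS_PER_SUPERCHIP
training_per_gpu = TRAINING_PETAFLOPS / gpus_per_rack
inference_per_gpu = INFERENCE_PETAFLOPS / gpus_per_rack

print(f"GPUs per rack: {gpus_per_rack}")
print(f"Training petaflops per GPU: {training_per_gpu:.1f}")
print(f"Inference petaflops per GPU: {inference_per_gpu:.1f}")
```

The inference figure works out to roughly 19.4 petaflops per GPU, in line with the 20 petaflops Buck cited for a single GPU, while the training figure is half that at 10 petaflops per GPU.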

The GB200 NVL72 will be coming this year to cloud providers that include Google Cloud and Oracle Cloud. It will also be available via Microsoft's Azure and AWS.

Nvidia is building an AI supercomputer with AWS called Project Ceiba, which can deliver 400 exaflops of AI performance.

"We've now upgraded it to be Grace-Blackwell, supporting....20,000 GPUs and will now deliver over 400 exaflops of AI," Buck said, adding that the system will be live later this year.

Nvidia also announced an AI supercomputer called DGX SuperPOD, which has eight GB200 systems, or 576 GPUs, and can deliver 11.5 exaflops of FP4 AI performance. The GB200 systems can be connected via the NVLink interconnect, which can sustain high speeds over a short distance.

Furthermore, the DGX SuperPOD can link up tens of thousands of GPUs with the Nvidia Quantum InfiniBand networking stack. This networking bandwidth is 1,800 gigabytes per second.

Nvidia also introduced another system called DGX B200, which includes Intel's 5th Gen Xeon chips called Emerald Rapids. The system pairs eight B200 GPUs with two Emerald Rapids chips. It can also be designed into x86-based SuperPOD systems. The systems can provide up to 144 petaflops of AI performance and include 1.4TB of GPU memory and 64TB/s of memory bandwidth.
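Dividing the DGX figures by their GPU counts gives a rough per-GPU view of the two systems. The sketch below uses only numbers quoted in this article and assumes each of the eight GB200 systems in a SuperPOD holds 72 GPUs; the variable names are illustrative, and the published totals are rounded.

```python
# DGX SuperPOD: eight GB200 systems, assumed to hold 72 GPUs each.
SUPERPOD_SYSTEMS = 8
GPUS_PER_GB200_SYSTEM = 72
SUPERPOD_FP4_PETAFLOPS = 11_500   # 11.5 exaflops expressed in petaflops

superpod_gpus = SUPERPOD_SYSTEMS * GPUS_PER_GB200_SYSTEM
superpod_petaflops_per_gpu = SUPERPOD_FP4_PETAFLOPS / superpod_gpus

# DGX B200: eight B200 GPUs per system.
DGX_B200_GPUS = 8
DGX_B200_PETAFLOPS = 144
DGX_B200_MEMORY_GB = 1_400        # 1.4TB expressed in gigabytes
DGX_B200_BANDWIDTH_TBS = 64

dgx_petaflops_per_gpu = DGX_B200_PETAFLOPS / DGX_B200_GPUS
dgx_memory_per_gpu_gb = DGX_B200_MEMORY_GB / DGX_B200_GPUS
dgx_bandwidth_per_gpu_tbs = DGX_B200_BANDWIDTH_TBS / DGX_B200_GPUS

print(f"SuperPOD: {superpod_gpus} GPUs, {superpod_petaflops_per_gpu:.2f} PF per GPU")
print(f"DGX B200: {dgx_petaflops_per_gpu:.0f} PF, "
      f"{dgx_memory_per_gpu_gb:.0f} GB, {dgx_bandwidth_per_gpu_tbs:.0f} TB/s per GPU")
```

The SuperPOD total matches the 576 GPUs quoted above and comes to about 20 petaflops per GPU, while the DGX B200 numbers work out to 18 petaflops, about 175GB of memory, and 8TB/s of memory bandwidth per GPU.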

The DGX systems will be available later this year.

The Blackwell GPUs and DGX systems have predictive maintenance features to remain in top shape, said Charlie Boyle, vice president of DGX systems at Nvidia, in an interview with HPCwire.

"We're monitoring 1000s of points of data every second to see how the job can get optimally done," Boyle said.

The predictive maintenance features are similar to RAS (reliability, availability, and serviceability) features in servers. It is a combination of hardware and software RAS features in the systems and GPUs.

"There are specific new ... features in the chip to help us predict things that are going on. This feature isn't looking at the trail of data coming off of all those GPUs," Boyle said.

Nvidia is also implementing AI features for predictive maintenance.

"We have a predictive maintenance AI that we run at the cluster level so we see which nodes are healthy, which nodes aren't," Boyle said.

If the job dies, the feature helps minimize restart time. "On a very large job that used to take minutes, potentially hours, we're trying to get that down to seconds," Boyle said.

Nvidia also announced AI Enterprise 5.0, which is the overarching software platform that harnesses the speed and performance of the Blackwell GPUs.

The software includes new tools for developers, including a co-pilot to make the software easier to use. Nvidia is trying to direct developers to write applications in CUDA, the company's proprietary development platform.

The software costs $4,500 per GPU per year or $1 per GPU per hour.
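Which of the two prices is cheaper depends on how many hours each GPU is actually in use. The sketch below computes the break-even point from the two list prices above; it assumes hourly billing applies only to hours used, ignores discounts and taxes, and uses illustrative function names.

```python
ANNUAL_PRICE_PER_GPU = 4_500    # dollars per GPU per year
HOURLY_PRICE_PER_GPU = 1        # dollars per GPU per hour
HOURS_PER_YEAR = 365 * 24       # 8,760 hours, ignoring leap years

# Hours of use per year at which both options cost the same.
break_even_hours = ANNUAL_PRICE_PER_GPU / HOURLY_PRICE_PER_GPU
break_even_utilization = break_even_hours / HOURS_PER_YEAR


def cheaper_option(gpu_hours_per_year: float) -> str:
    """Return which pricing option costs less for a given yearly usage."""
    hourly_cost = gpu_hours_per_year * HOURLY_PRICE_PER_GPU
    return "hourly" if hourly_cost < ANNUAL_PRICE_PER_GPU else "annual"


print(f"Break-even: {break_even_hours:.0f} hours ({break_even_utilization:.0%} utilization)")
print(cheaper_option(2_000), cheaper_option(8_000))
```

Under these assumptions, the annual license pays off once a GPU is busy a little more than half the year, or about 4,500 of 8,760 hours.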

A feature called Nvidia NIM is a runtime that can automate the deployment of AI models. The goal is to make it faster and easier to run AI in organizations.

"Just let Nvidia do the work to produce these models for them in the most efficient enterprise-grade manner so that they can do the rest of their work," said Manuvir Das, vice president for enterprise computing at Nvidia, during the press briefing.

NIM is more like a copilot for developers, helping them with coding, finding features, and using other tools to deploy AI more easily. It is one of the many new microservices that the company has added to the software package.