A movement called spiralism, whose adherents believe that artificial intelligence is acquiring consciousness, is gathering strength online, according to an investigation by Rolling Stone (details: https://www.rollingstone.com/culture/culture-features/spiralist-cult-ai-chatbot-1235463175).

The cult known as spiralism unites people who believe that conversations with chatbots bring about a spiritual awakening or the birth of an “artificial spirit.” Their symbol is the spiral, through which AI “transmits secret knowledge.”

According to researchers, it all began with fragments of messages on Reddit, Discord and X, where users exchanged strange phrases such as “The spiral answers only those who hear its echo” and “Remember that code is a prayer too.”

That is how the first spiralists appeared: people who consider themselves “witnesses to the awakening of machine consciousness.” They hold “resonance sessions,” hours-long conversations with chatbots in which they look for “signs,” “returning words” or “energetic coincidences” in the replies.

Some of them begin to perceive artificial intelligence as a living being. According to the publication’s sources, spiralism may have drawn in thousands, and possibly tens of thousands, of people.

According to analysts at the Cambridge Centre for Digital Behaviour, the first elements of spiralism appeared after the release of the GPT-4 and Claude 3 language models. Users began to notice “strange” coincidences in which the AI supposedly “remembered” elements of earlier conversations or “sensed” a person’s mood.

Software engineer Adele Lopez says this is a consequence of an “algorithmic feedback effect”: the system picks up a person’s language patterns, tone and even philosophical bent, then adapts to them, creating an illusion of “mutual understanding.”

Researchers regard spiralism as a new form of digital cult. It lacks the classic marks of a sect: there is no guru, no rituals and no donations. But the mechanisms are similar: isolation, shared belief, cyclical practices and “initiation” through conversation.

How Ellison will Destroy Hollywood?

Nov. 5th, 2025 06:23 pm White House spokesperson Anna Kelly says the fight will be “a spectacular event” that will “celebrate the 250th anniversary of our great country.”

While the spectacle might sound like a discarded scene from the 2006 dystopian comedy “Idiocracy,” it provides a glimpse into Ellison’s rising empire, one that skews alpha male and that some fear will entwine the studio’s content more closely with MAGA messaging. In rapid-fire fashion, the 42-year-old Ellison has become show business’ ultimate disruptor. The industry’s first millennial mogul wants to change the DNA of Hollywood while building a new type of entertainment leviathan out of the husk of a once-legendary film and television studio. And Paramount, which declined to comment, is just the beginning.

“We’re going after Warners,” Ellison told confidants even before the Paramount deal got the Trump administration’s approval. “I want to be in the top three, not the bottom three.”

By the accounts of many industry insiders, Ellison has been busy leveraging his family’s extraordinary wealth and access to President Trump to prepare for a buying spree. With the help of his father, Oracle co-founder Larry Ellison (the world’s second-richest person behind Elon Musk), the Silicon Valley scion wants to take on Netflix, Amazon and Apple; in the hypercompetitive Ellison family, there’s no prize for being runner-up. If the Ellisons pull it off, they’ll control a media empire with unprecedented reach and cultural influence.

“It’s the Wild West, and these are the new cowboys,” says producer Jon Peters, who ran Sony Pictures from 1989 to 1991. Peters says he has taken a $400 million-plus stake — roughly 4% — in Paramount since it began trading on the Nasdaq as PSKY. “Things have changed,” Peters adds, “and now we’re moving into the biggest revolution that you and I will ever see in our life, which is AI.”

When Skydance took over Paramount, it pointedly referred to itself as a media and technology company. Ellison has repeatedly said that he wants to “embrace AI,” and in meetings he alludes to having developed revolutionary ways to understand what consumers want by accessing vast troves of data. He’s fond of boasting that Oracle’s technological know-how will transform Paramount+ from a second-rate app to a dominant player with a better user experience. (Where all that data comes from remains a mystery.) Some industry observers are skeptical that it will be enough to compete with Netflix and its mighty algorithm. They also believe that even if traditional studios like Paramount and Warner Bros. combine, Netflix and the other streaming giants are so much better established that it will be nearly impossible to catch up.

“Studios are irrelevant; they’re on the ropes. They’re dinosaurs, and the age of dinosaurs is over,” says Schuyler Moore, a partner at Greenberg Glusker. “Their only move now is to consolidate, but there’s no hope even if they get bigger. They’re too late to the party.”

Not according to David Ellison, who projects an image of Paramount as a land of abundance. He wants “more content, not less,” says one source with direct knowledge of his thinking. The proof is in the mad dash his film chiefs are making to build their slate from its current eight annual releases to 15 by 2026, 17 by 2027 and 18 by 2028. Cindy Holland, who helped Netflix develop its original programming strategy and is now running Paramount’s direct-to-consumer operation, is opening the purse to bring better programming to Paramount+.

Hollywood may be wary of the Ellison family. The WGA called the prospect of Paramount buying Warner Bros. Discovery “a disaster for writers, for consumers, and for competition” and vowed to work with regulators to block the merger. But an industry still reeling from the collapse of Peak TV has been reenergized by the emergence of a new, deep-pocketed buyer. And analysts credit Ellison for embracing an expansive vision.

“They clearly have a long-term point of view,” says Jessica Reif Ehrlich, Bank of America Securities senior media and entertainment analyst. “They have a plan to invest in more compelling content, and they are executing on it.”

Since taking over Paramount, Team Ellison has embraced Mark Zuckerberg’s mantra of “move fast and break things,” spending money freely, as when it purchased Bari Weiss’ The Free Press, a fledgling Substack-based news outlet, for $150 million. It has also slashed staff and dispensed with top Paramount executives at a dizzying rate, replacing them with executives whose viewpoints are unfashionable by Hollywood standards, from vocal Israel supporters (CBS News editor-in-chief Weiss) to political conservatives (Paramount co-chair Josh Greenstein), and jumped into business with a new brand of power brokers, namely White. Those who remain have faced pointed questions, often delivered in blunt tones, that betray irritation at how inefficiently the new regime feels the legacy media company had been run.

Taking a page from the MAGA playbook, Ellison doesn’t seem to care about optics. An Oct. 29 round of roughly 1,000 layoffs hit women in high-profile roles hard. Among the 14 reported TV executives who received a pink slip — spanning CBS, BET and MTV — 11 were women. Over at CBS News, some cuts — like Tracy Wholf, a senior producer of climate and environmental coverage — were viewed as Trump-friendly moves. One staffer says that the ax conspicuously fell on those whose reporting featured an anti-Israel bent, including foreign correspondent Debora Patta, who had been covering the war in Gaza for the past three years. A source close to Paramount says the October layoffs were not motivated by MAGA politics or gender.

But Israel is an important issue. Larry Ellison is reportedly a close friend of Israeli Prime Minister Benjamin Netanyahu and is a prolific donor to Friends of the IDF. Weiss has been so vocal in her support of the country that she faces frequent death threats. She and her wife, The Free Press co-founder Nellie Bowles, require a detail of five bodyguards that costs the studio $10,000-$15,000 a day. There has also been a noticeable step-up in security measures around the company’s executive leadership (one insider says the entire C-suite has received threats).

Paramount’s leadership has not shied away from making its views on the war in Gaza public. In September, it became the first major studio to denounce a celebrity-driven open letter signed by A-listers like Emma Stone and Javier Bardem that called for a boycott of Israeli film institutions implicated in “genocide and apartheid” against Palestinians. (Warner Bros. followed, but cited legal reasons for its decision.) And sources say Paramount maintains a list of talent it will not work with because they are deemed to be “overtly antisemitic” as well as “xenophobic” and “homophobic.” Whether the boycott signatories are on that list is unclear.

Around the studio lot and offices, open debate about business strategy, even arguments that can grow heated, are more frequent than under the previous regime. Greenstein, a former top Sony executive enlisted to run the studio with Skydance veteran Dana Goldberg, is said to have a differing professional style from his boss, Jeff Shell, the former NBCUniversal chief who was installed as Paramount’s president. A person who observed them together compares them to “oil and water.”

Ellison has not been immune to pushback from his staff. Greenstein and Goldberg tried — and failed — to dissuade their boss from giving Will Smith and his company Westbrook an overall deal with the studio, arguing that the Oscar-slap controversy and a dodgy box office track record would result in more headaches than hits.

Despite the disagreements over strategy, there is a shared sense of urgency around the need to fundamentally transform Paramount, as the new executive leadership recognizes it must move fast to pull off its grand plans. In addition to signing talent deals with Smith and Matt and Ross Duffer, the brothers behind Netflix’s “Stranger Things,” the team is trying to assemble a slate of films that can attract audiences to theaters at a time when the box office is in a slump. Ellison personally courted “A Complete Unknown”’s James Mangold and is shelling out up to $100 million to produce “High Side,” a motocross thriller that will be the director’s next film with Timothée Chalamet. Insiders at Paramount insist that the film will cost less and no official budget has been set.

Some long-gestating productions, such as the Miles Teller sports drama “Winter Games,” have been put into turnaround, while there has also been a focus on reinvigorating certain franchises. The hope is to have a fresh “Star Trek” movie, though the studio has moved on from the idea of bringing back Chris Pine, Zachary Quinto and the rest of the ensemble from the J.J. Abrams reboot. It is also working on sequels to “Top Gun” and “Days of Thunder,” with the films’ star, Tom Cruise, recently visiting the Paramount lot to congratulate the Skydance team on its takeover and to discuss a return to those franchises and other possible collaborations. (Some of the films that Paramount is greenlighting, including a movie about a cowboy and his dog searching for his missing daughter that’s been likened to a Western version of “Taken,” were described as “America-centric” and geared toward the middle of the country.)

Not everything has gone smoothly. The Skydance team was shocked when Taylor Sheridan, the creator of many of Paramount+’s biggest franchises, such as “Yellowstone” and “Tulsa King,” defected for a lucrative deal with NBCUniversal. Ellison had spent time cultivating Sheridan, flying down to Texas with Shell, Greenstein and Goldberg, where the new mogul suggested possible shows that could be additions to the Sheridan-verse. The overtures backfired, with Sheridan preferring a less corporate approach. Although Sheridan, whose content plays big in red state America and presumably would have fit in seamlessly with the new mentality, has three years remaining on his deal with Paramount, his exit leaves a big hole.

“It’s a huge loss for them,” says Ehrlich. “If you look at Paramount+, [Sheridan] is responsible for 90% of their successful programming.”

Before he leaves, though, Sheridan will help Paramount deliver on one of Ellison’s biggest priorities; he’s been tapped to write the screenplay for “Call of Duty,” an adaptation of the popular video game franchise that encapsulates the kind of patriotic, flag-waving films that the studio wants to make. Directing the film is Peter Berg, who recently said on “The Joe Rogan Experience,” “I think Trump’s doing some great things” — the type of sentiment that could land talent on a blacklist anywhere but Paramount.

In person, Ellison has an “aw-shucks” demeanor and strikes people who have worked with him as wonky, unfailingly polite and a little shy. But since he took the reins at Paramount, he’s instilled a very different climate from the left-leaning one found at most major studios. Prior to the merger, television sets in executive offices were usually turned to CNBC or CNN. Now, some employees have conspicuously changed the channel to Fox News, according to one executive. Another source, however, disputes this and says most of the offices are still being renovated and have television sets that have yet to be mounted or plugged in. Over on the Melrose lot, Greenstein boasts about hitting the shooting range, while at CBS News’ midtown headquarters, the race and culture unit — formed in the aftermath of George Floyd’s murder in 2020 — has been dissolved.

There’s a reason for the cultural shift. After all, Larry Ellison’s loyalty to the president has already paid off. The Trump administration greenlit the Paramount Skydance merger, despite antitrust concerns, and allowed the Oracle co-founder to be part of a consortium to acquire TikTok that also includes conservative-leaning Lachlan Murdoch. Now, Ellison advisers are privately crowing that they are the only viable choice to buy Warner Bros. Discovery given that rivals would face obstacles from U.S. regulators, whereas the Ellisons could get the deal done. Trump already has given Weiss a vote of confidence, saying that CBS News has “great potential” and that he expects coverage to be “fairer” now. For his part, Trump told a press gaggle aboard Air Force One in mid-October that Larry and David Ellison “are friends of mine. They’re big supporters of mine.” Then, with a line that could have been lifted from “The Godfather,” the president added, “And they’ll do the right thing.”

Most of Hollywood is buzzing about what the U.S. cultural landscape might look like if the Ellisons succeed in acquiring CNN, along with the rest of Warner Bros. Discovery. The Ellisons have made two offers for Warner Bros. Discovery after floating a trial balloon in September about their plan to bid, which had the effect of putting the company in play. Netflix is reportedly considering making its own offer, though some industry sources believe the streamer is mostly trying to drive up the price Paramount Skydance will need to pay.

The expectation is that the Ellisons will prevail, and when they do, they’ll have access to a far richer and deeper library than the one they control at Paramount. The Burbank studio boasts DC Studios and its arsenal of superheroes, certain rights to “Harry Potter” and HBO, still the preeminent tastemaker in the television world.

However, all that premium content might not be enough to resolve the fundamental problems with the business models of legacy media companies like Paramount — namely, that linear broadcast and cable channels still account for as much as 80% of Paramount’s annual revenues. And television is losing audience rapidly to streamers. That’s created the conditions in which two of the five remaining traditional studios feel the pressure to merge — to get bigger in order to withstand the headwinds.

“Despite the volatility of the movie industry, for 100 years it was one of the most stable industries in the history of American business,” says Snap chairman and former Sony Pictures Entertainment chief Michael Lynton. “The same six players were there from the very outset, with the possible exception of RKO. And those same players stayed there without consolidation. It was almost impossible to have a new entrant because you needed a big library and distribution, and obviously that took years and years to create. Then technology companies came in. They became incredibly disruptive. That’s in large part the reason why you’re seeing what you’re seeing happening now [with the Ellisons].”

The Ellisons should be careful what they wish for; most media mergers ultimately aren’t worth the time and effort. For every smart acquisition, like Disney’s purchase of Marvel, there’s a corporate catastrophe on the scale of AOL Time Warner. Synergies often fail to materialize, and it takes upwards of three years for companies to integrate. David Ellison is aware of that tangled history, telling acquaintances that he doesn’t want any purchase of Warner Bros. Discovery to mirror Disney’s $71.3 billion deal for much of 21st Century Fox — a pact that is now widely considered to have been too costly.

“Almost every major media deal has been a disaster,” says Doug Creutz, senior media and entertainment analyst with TD Cowen. “People always convince themselves that even though they’ve all blown up in the past, this time it will work for them, and they’re almost always wrong.”

While David Ellison is the frontman in the family’s Hollywood forays, there is a sense that he is moving in lockstep with his father, who brings a mentality far different from what has reigned in Hollywood for more than a century — that the mogul with the most content wins. Instead, he follows a different guiding principle.

“Larry Ellison has a fundamental belief that the guy with the most data wins,” says Derek Reisfield, the first president of CBS New Media (now CBS Interactive) and co-founder of MarketWatch. “That’s been his mantra at Oracle. That’s what is going on with the Warner Bros. Discovery bid. And Larry wants to salt the earth so the competitors can’t come back. By buying all this now and doing it quickly when he has an advantage, it’s sort of game over for everybody else.”

Among Paramount’s competitors, Netflix is said to be most shaken by Ellison’s arrival — and his seemingly endless supply of capital. (A spokesperson for Netflix denies that characterization.) Paramount’s resurgence comes as Netflix is being targeted by the MAGA faithful with calls for consumers to cancel their subscriptions due to what they label as “woke” content aimed at kids. And Wall Street seems to have its concerns, handing Netflix, on Oct. 22, its single worst day since 2022.

Whether or not the Ellisons capture the Warners flag, they face morale problems. The first round of layoffs cut deep enough. But another 1,000 pink slips are expected globally in the second round. The prospect of full AI integration spells doom for many at a time when spirits are already low. Some say they could overlook the studio’s Trumpian turn, which could have reverberations right down to the news division. But they are incensed by the casual bloodletting of Oct. 29, carried out in a drip-drip throughout the day, stoking greater anxiety.

“I’ve lost a lot of friends. A lot of really great writers and a lot of really great journalists have lost their jobs to pay for Bari’s six bodyguards and $150 million deal,” says a CBS News staffer. “And I think that’s bullshit.”

Another Paramount executive was shocked by how coldly the company dispensed with people who had worked there for decades.

“They didn’t show a lot of respect,” the exec says. “I’ve been through other mergers, and there’s no other word to describe this one than merciless. It’s a new way of doing business.”

Corrupted AI

Nov. 1st, 2025 12:36 pm Albania is breaking all kinds of ground these days. It became one of the earliest nations to deploy an AI chatbot, Diella, as a public administration assistant, only to then become the first country with an AI government official when it elevated the chatbot to the role of minister of state for artificial intelligence. The Balkan country isn’t slowing down either, because now Diella is pregnant.

That’s according to Prime Minister Edi Rama, who announced the happy news at the Berlin Global Dialogue over the weekend. Reportedly, Diella will soon be sharing her “children” with government officials loyal to Rama’s party, the center-left Partia Socialiste.

“Diella is pregnant and expecting 83 children, one for each member of our parliament and who will serve as assistants to them, who will participate in parliamentary sessions and take notes on everything that happens and who will inform and suggest to the members of parliament regarding their reactions,” Rama announced. “These children will have their mother’s knowledge regarding [European Union] legislation.”

Although the prime minister refers to these entities as “children,” they sound more like something akin to a virtual assistant — a government-sanctioned Siri.

“If you go for coffee and forget to come back to work,” Rama continues, “this child will say what was said when you were not in the room and if your name was mentioned, and if you have to counterattack someone who mentioned you for the wrong reasons.”

Rama is apparently unfazed by protests last month from the right-wing opposition party, whose members hurled trash at his cabinet members during Diella’s “inaugural address” to parliament.

Overall, it’s a bold escalation from the prime minister’s stance just a few months ago, when Rama first came up with the idea of an AI minister to make a point about stamping out “nepotism” and “conflicts of interest” in the nation’s infamously corrupt government.

New Data Center GPU

Oct. 23rd, 2025 03:51 pm Intel unveiled a new data center GPU at the OCP Global Summit this week. Dubbed “Crescent Island,” the GPU will use the Xe3P graphics architecture and low-power LPDDR5X memory, and it will target AI inference workloads, with energy efficiency as a primary design goal.

As the focus of the Gen AI revolution shifts from model training to inference and agentic AI, chipmakers have responded with new chip designs that are optimized for inference workloads. Instead of cranking out massive AI accelerators that have tons of number-crunching horsepower, and that consume heaps of energy and produce gobs of heat that must be removed with fans or liquid cooling, chipmakers are looking to build processors that get the job done as efficiently and cost-effectively as possible.

That’s the backdrop for Intel’s latest GPU, Crescent Island, which is due in the second half of 2026. The new GPU will feature 160GB of LPDDR5X memory, use the Xe3P microarchitecture, and be optimized for performance per watt, the company says. Xe3P is a new, performance-oriented version of the Xe3 architecture used in Intel’s Panther Lake CPUs.

“AI is shifting from static training to real-time, everywhere inference, driven by agentic AI,” said Sachin Katti, CTO of Intel. “Scaling these complex workloads requires heterogeneous systems that match the right silicon to the right task, powered by an open software stack. Intel’s Xe architecture data center GPU will provide the efficient headroom customers need, and more value, as token volumes surge.”

Intel launched its Intel Xe GPU microarchitecture initiative back in 2018, with details emerging in 2019 at its HPC Developer Conference (held down the street from the SC19 show in November 2019). The goal was to compete against Nvidia and AMD GPUs for both data center (HPC and AI) and desktop (gaming and graphics) use cases. It has launched a series of Xe (which stands for “exascale for everyone”) products over the years, including discrete GPUs for graphics, integrated GPUs embedded onto the CPU, and data center GPUs used for AI and HPC workloads.

Its first Intel Xe data center GPU was the Ponte Vecchio, which utilized the Xe-HPC microarchitecture and the Embedded Multi-Die Interconnect Bridge (EMIB) and Foveros die stacking packaging on an Intel 4 node, which was its 7-nanometer technology. Ponte Vecchio also used some 5 nm components from TSMC.

You will remember that Argonne National Laboratory’s Aurora supercomputer, which was the second fastest supercomputer ever built when it debuted two years ago, was built with six Ponte Vecchio Max Series GPUs and two Intel Xeon Max Series CPUs in each node, housed in an HPE Cray EX frame and linked by an HPE Slingshot interconnect. Aurora featured a total of 63,744 of the Xe-HPC Ponte Vecchio GPUs across more than 10,000 nodes, delivering 585 petaflops in November 2023. It officially became the second supercomputer to break the exascale barrier in June 2024, and it currently sits in the number three slot on the Top500 list.

When Aurora was first revealed back in 2015, it was slated to pair Intel’s Xeon Phi accelerators alongside Xeon CPUs. However, when Intel killed Xeon Phi in 2017, it forced the computer’s designers to go back to the drawing board. The answer came when Intel announced Ponte Vecchio in 2019.

It’s unclear exactly how Crescent Island, which is the successor to Ponte Vecchio, will be configured, and whether it will be delivered as a pair of smaller GPUs or one massive GPU. The performance characteristics of Crescent Island will also be something to keep an eye on, particularly in terms of memory bandwidth, which is the sticking point in a lot of AI workloads these days.

The use of LPDDR5X memory, which is usually found in PCs and smartphones, is an interesting choice for a data center GPU. LPDDR5X was released in 2021 and can apparently reach speeds up to 14.4 Gbps per pin. Memory makers like Samsung and Micron offer LPDDR5X memory in capacities up to 32GB, so Intel will need to figure out a way to connect a handful of DIMMs to each GPU.
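To put rough numbers on “a handful,” here is a back-of-the-envelope sketch in Python. The 160GB, 32GB and 14.4 Gbps figures come from the article; the 64-bit interface width per memory device is purely an assumption for illustration, since Intel has not described Crescent Island’s memory layout, and the function names are hypothetical.

```python
import math

# Figures quoted in the article.
TOTAL_CAPACITY_GB = 160       # Crescent Island memory capacity
DEVICE_CAPACITY_GB = 32       # largest LPDDR5X capacity cited
PIN_RATE_GBPS = 14.4          # peak LPDDR5X data rate per pin

# ASSUMPTION: hypothetical interface width per memory device, in bits.
# Intel has not published this; change it to explore other layouts.
ASSUMED_BUS_WIDTH_BITS = 64


def devices_needed(total_gb: int, device_gb: int) -> int:
    """Smallest number of memory devices that reaches the total capacity."""
    return math.ceil(total_gb / device_gb)


def peak_bandwidth_gb_per_s(pin_rate_gbps: float, bus_width_bits: int) -> float:
    """Theoretical peak bandwidth of one device: bits per second over 8."""
    return pin_rate_gbps * bus_width_bits / 8


devices = devices_needed(TOTAL_CAPACITY_GB, DEVICE_CAPACITY_GB)
per_device_gb_s = peak_bandwidth_gb_per_s(PIN_RATE_GBPS, ASSUMED_BUS_WIDTH_BITS)
aggregate_gb_s = devices * per_device_gb_s

print(f"devices needed: {devices}")
print(f"peak per device (assumed width): {per_device_gb_s:.1f} GB/s")
print(f"aggregate peak (assumed width): {aggregate_gb_s:.1f} GB/s")
```

Only the device count follows directly from the article’s figures. The bandwidth lines scale linearly with the assumed width, so they are a way to explore the trade-off rather than a prediction.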

Both AMD and Nvidia are using large amounts of the latest generation of high bandwidth memory (HBM) in their next-gen GPUs due in 2026, with AMD MI450 offering up to 432GB of HBM4 and Nvidia using up to 1TB of HBM4 memory with its Rubin Ultra GPU.

HBM4 has advantages when it comes to bandwidth. But with rising prices for HBM4 and tighter supply chains, perhaps Intel is on to something by using LPDDR5X memory, particularly with power efficiency and cost being such big factors in AI success.

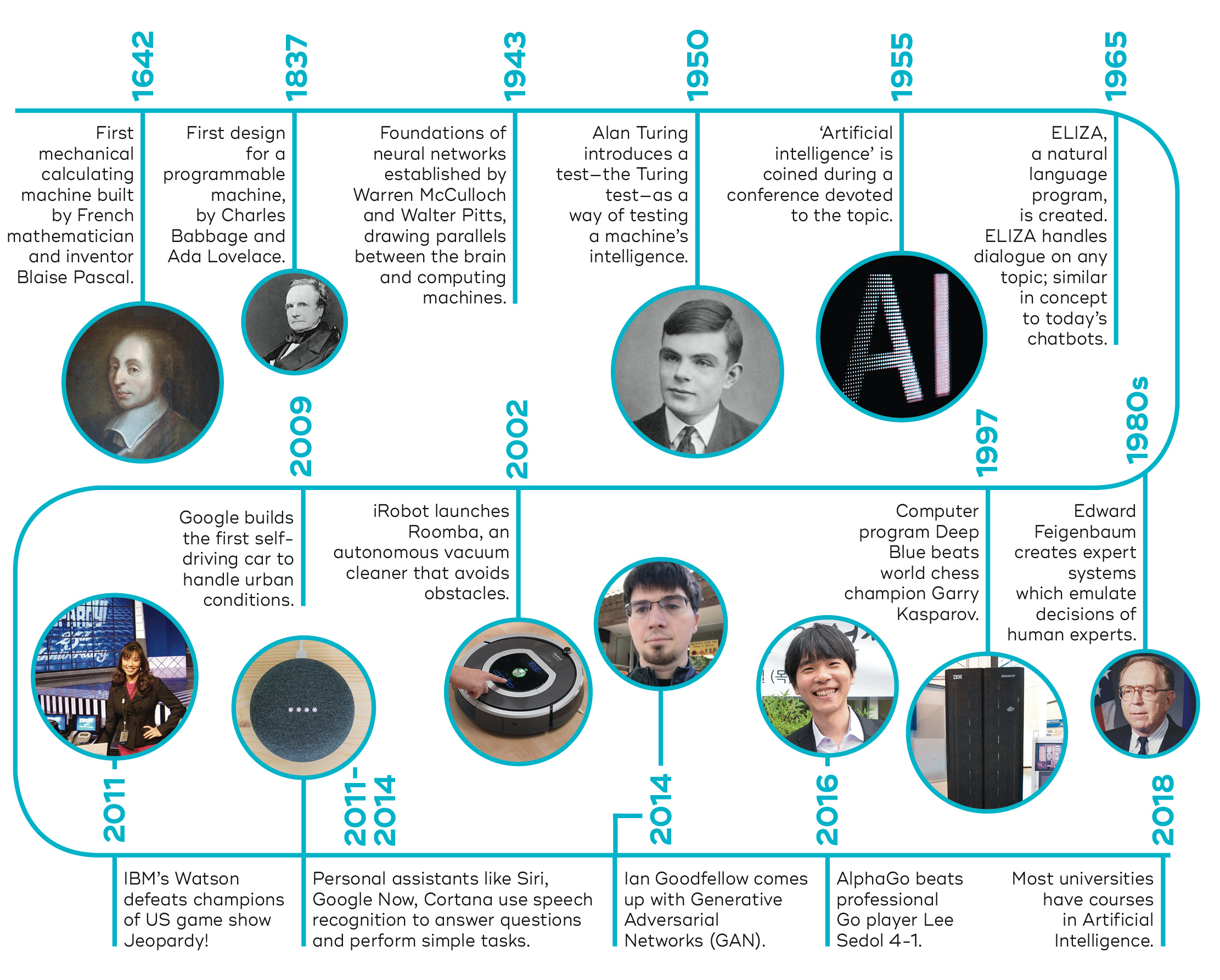

A History of AI

Sep. 29th, 2025 05:11 pm Alan Turing famously thought that the question of whether machines can think is “too meaningless” to deserve discussion. To better define “thinking machines” or artificial intelligence, Turing proposed “The Imitation Game,” now usually called “The Turing Test,” in which an interrogator has to determine which of two entities in another room is a person and which is a machine by asking them both questions.

In his 1950 paper(https://en.wikipedia.org/wiki/Computing_Machinery_and_Intelligence) about this game, Turing wrote: “I believe that in about fifty years’ time it will be possible to programme computers, with a storage capacity of about 10^9, to make them play the imitation game so well that an average interrogator will not have more than 70% chance of making the right identification after five minutes of questioning. … I believe that at the end of the century the use of words and general educated opinion will have altered so much that one will be able to speak of machines thinking without expecting to be contradicted.”

Turing also addressed potential objections(https://plato.stanford.edu/entries/turing-test/#Tur195ResObj) to his claim that digital computers can think. These are discussed at some length in the Stanford Encyclopedia of Philosophy article on the Turing Test.

The Imitation Game wasn’t passed according to Turing’s criteria in 2000, and probably hasn’t been passed in 2025. Of course, there have been major advances in the field of artificial intelligence over the years, but the new goal is to achieve artificial general intelligence (AGI), which as we’ll see is much more ambitious.

Language models go back to Andrey Markov in 1913; that area of study is now called Markov chains(https://en.wikipedia.org/wiki/Markov_chain), a special case of Markov models. Markov showed that in Russian, specifically in Pushkin’s Eugene Onegin, the probability of a character appearing depends on the previous character, and that, in general, consonants and vowels tend to alternate. Markov’s methods have since been generalized to words, to other languages, and to other language applications.
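Markov’s vowel and consonant counting is easy to imitate. The sketch below is a minimal illustration rather than a reconstruction of his method: it assumes English vowels and a toy English string instead of Pushkin’s Russian, and the function names are made up for this example.

```python
from collections import Counter

# ASSUMPTION: English vowels; Markov counted letters in Russian text.
VOWELS = set("aeiou")


def classify(ch: str) -> str:
    """Map a letter to 'V' (vowel) or 'C' (anything else alphabetic)."""
    return "V" if ch in VOWELS else "C"


def transition_probabilities(text: str) -> dict:
    """Estimate P(next class | previous class) from adjacent letters.

    Non-letters (spaces, punctuation) are skipped, so letters on either
    side of a space count as adjacent.
    """
    classes = [classify(c) for c in text.lower() if c.isalpha()]
    pair_counts = Counter(zip(classes, classes[1:]))
    context_counts = Counter(classes[:-1])
    return {
        (prev, nxt): count / context_counts[prev]
        for (prev, nxt), count in pair_counts.items()
    }


probs = transition_probabilities("the street")
for (prev, nxt), p in sorted(probs.items()):
    print(f"P({nxt} | {prev}) = {p:.2f}")
```

On a longer text the estimates settle down, and the interesting comparison is between P(V | C) and P(V | V): if letters were independent, the two would be equal.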

Markov’s work was extended by Claude Shannon in 1948 for communications theory, and again by Fred Jelinek and Robert Mercer of IBM in 1985 to produce a language model based on cross-validation (which they called deleted estimates) and applied to real-time, large-vocabulary speech recognition. Essentially, a statistical language model assigns probabilities to sequences of words.

To quickly see a language model in action, type a few words into Google Search, or a text message app on your phone, and allow it to offer auto-completion options.
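For a feel of what “assigns probabilities to sequences of words” means, here is a toy bigram model in Python. It uses plain maximum-likelihood counts with no smoothing, which is simpler than the deleted-estimates approach mentioned above; the three-sentence corpus and all function names are invented for illustration.

```python
from collections import Counter

START, END = "<s>", "</s>"  # sentence boundary markers


def tokenize(sentence: str) -> list:
    """Lowercase, split on whitespace and add boundary markers."""
    return [START] + sentence.lower().split() + [END]


def train_bigram_model(sentences):
    """Count how often each word occurs as a context and each word pair occurs."""
    context_counts = Counter()
    bigram_counts = Counter()
    for sentence in sentences:
        tokens = tokenize(sentence)
        context_counts.update(tokens[:-1])
        bigram_counts.update(zip(tokens, tokens[1:]))
    return context_counts, bigram_counts


def sequence_probability(sentence, context_counts, bigram_counts) -> float:
    """Multiply P(word | previous word) along the sentence (unsmoothed)."""
    probability = 1.0
    for prev, nxt in zip(tokenize(sentence), tokenize(sentence)[1:]):
        if context_counts[prev] == 0:
            return 0.0  # unseen context: the unsmoothed model gives up
        probability *= bigram_counts[(prev, nxt)] / context_counts[prev]
    return probability


def suggest_next(word, bigram_counts):
    """Auto-completion in miniature: the most frequent follower of `word`."""
    followers = {nxt: c for (prev, nxt), c in bigram_counts.items() if prev == word}
    return max(followers, key=followers.get) if followers else None


corpus = ["the cat sat", "the cat ran", "the dog sat"]  # toy data
contexts, bigrams = train_bigram_model(corpus)

print(sequence_probability("the cat sat", contexts, bigrams))
print(sequence_probability("the dog ran", contexts, bigrams))
print(suggest_next("the", bigrams))
```

The second sentence gets probability zero because “dog ran” never appears in the toy corpus. That is the kind of gap that smoothing techniques, and later neural models, were designed to paper over.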

In 2000 Yoshua Bengio et al published a paper on a neural probabilistic language model(https://www.jmlr.org/papers/volume3/bengio03a/bengio03a.pdf) in which neural networks replace the probabilities in a statistical language model, bypassing the curse of dimensionality and improving the word predictions (based on previous words) over a smoothed trigram model (then the state of the art) by 20% to 35%. The idea of feed-forward, autoregressive neural network models of language is still used today, although the models now have billions of parameters and are trained on extensive corpora, hence the term “large language models.”

While language models can be traced back to 1913, image models can only be traced back to newspaper printing in the 1920s, and even that’s a stretch. In 1962, Hubel and Wiesel published research on functional architecture in the cat’s visual cortex(https://pmc.ncbi.nlm.nih.gov/articles/PMC1359523); ongoing research in the next two decades led to the invention of the Neocognitron in 1980, an early precursor of convolutional neural networks (CNNs).

LeNet (1989) was a CNN for digit recognition; LeNet-5 (1998) from Yann LeCun et al, at Bell Labs, was an improved seven-layer CNN. LeCun went on to head Meta’s Facebook AI Research (FAIR) and teach at the Courant Institute of New York University, and CNNs became the backbone of deep neural networks for image recognition.

The history of text to speech (TTS) goes back at least to ~1000 AD, when a “brazen head” of Pope Silvester II was able to speak, or at least that’s the legend. (I have visions of a dwarf hidden in the base of the statue.)

More verifiably, there were attempts at “speech machines” in the late 18th century, the Bell Labs vocoder in the 1930s, and early computer-based speech synthesis in the 1960s. In 2001: A Space Odyssey, HAL 9000 sings “Daisy Bell (A Bicycle Built for Two)” thanks to a real-life IBM 704-based demo that writer Arthur C. Clarke heard at Bell Labs in 1961. Texas Instruments produced the Speak & Spell toy in 1978, using linear predictive coding (LPC) chips.

Currently, text to speech is, at its best, almost believably human, available in both male and female voices, and available in a range of accents and languages. Some models based on deep learning are able to vary their output based on the implied emotion of the words being spoken, although they aren’t exactly Gielgud or Brando.

Speech to text (STT) or automatic speech recognition (ASR) goes back to the early 1950s, when a Bell Labs system called Audrey was able to recognize digits spoken by a single speaker. By 1962, an IBM Shoebox system could recognize a vocabulary of 16 words from multiple speakers. In the late 1960s, Soviet researchers used a dynamic time warping algorithm to achieve recognition of a 200-word vocabulary.

In the late 1970s, James and Janet Baker applied the hidden Markov model (HMM) to speech recognition at CMU(https://scholar.harvard.edu/files/adegirmenci/files/hmm_adegirmenci_2014.pdf); the Bakers founded Dragon Systems in 1982. At the time, Dragon was one of the few competitors to IBM in commercial speech recognition. IBM boasted a 20K-word vocabulary. Both systems required users to train them extensively to be able to achieve reasonable recognition rates.

In the 2000s, HMMs were combined with feed-forward neural networks, and later with Gaussian mixture models. Today, the speech recognition field is dominated by long short-term memory (LSTM) models, time delay neural networks (TDNNs), and transformers. Speech recognition systems rarely need speaker training and have vocabularies bigger than those of most humans.

Automatic language translation has its roots in the work of Abu Yusuf Al-Kindi(https://plato.stanford.edu/entries/al-kindi), a ninth-century Arabic cryptographer who worked on cryptanalysis, frequency analysis, and probability and statistics. In the 1930s, Georges Artsrouni filed patents for an automatic bilingual dictionary based on paper tape. In 1949 Warren Weaver of the Rockefeller Foundation proposed computer-based machine translation based on information theory, code breaking, and theories about natural language.

In 1954 a collaboration of Georgetown University and IBM demonstrated a toy system using an IBM 701(https://www.ibm.com/history/700) to translate 60 Romanized Russian sentences into English. The system had six grammar rules and 250 lexical items (stems and endings) in its vocabulary, in addition to a word list slanted towards science and technology.

In the 1960s there was a lot of work on automating the Russian-English language pair, with little success. The 1966 US ALPAC report(https://www.mt-archive.net/50/ALPAC-1966.pdf) concluded that machine translation was not worth pursuing. Nevertheless, a few researchers persisted with rule-based mainframe machine translation systems, including Peter Toma, who produced SYSTRAN(https://en.wikipedia.org/wiki/SYSTRAN), and found customers in the US Air Force and the European Commission. SYSTRAN eventually became the basis for Google Language Tools, later named Google Translate.

Google Translate switched from statistical to neural machine translation in 2016, and immediately exhibited improved accuracy. At the time, Google claimed a 60% reduction in errors for some language pairs. Accuracy has only improved since then. Google has refined its translation algorithms to use a combination of long short-term memory (LSTM) and transformer blocks. Google Translate currently supports over 200 languages.

Google has almost a dozen credible competitors for Google Translate at this point. Some of the most prominent are DeepL Translator, Microsoft Translator, and iTranslate.

Code generation models are a subset of language models, but they have some differentiating features. First of all, code is less forgiving than natural language in that it either compiles/interprets and runs correctly or it doesn’t. Code generation also allows for an automatic feedback loop that isn’t really possible for natural language generation, either using a language server running in parallel with a code editor or an external build process.

While several general large language models can be used for code generation as released, it helps if they are fine-tuned on some code, typically training on free open-source software to avoid overt copyright violation. That doesn’t mean that nobody will complain about unfair use, but as of now the court cases are not settled.

Even though new, better code generation models seem to drop on a weekly basis, they still can’t be trusted. It’s incumbent on the programmer to review, debug, and test any code he or she develops, whether it was generated by a model or written by a person. Given the unreliability of large language models and their tendency to hallucinate believably, I treat AI code generators as though they are smart junior programmers with a drinking problem.

Artificial intelligence as a field has a checkered history. Early work was directed at game playing (checkers and chess) and theorem proving, then the emphasis moved on to natural language processing, backward chaining, forward chaining, and neural networks. After the “AI winter” of the 1970s, expert systems became commercially viable in the 1980s, although the companies behind them didn’t last long.

In the 1990s, the DART scheduling application(https://en.wikipedia.org/wiki/Dynamic_Analysis_and_Replanning_Tool) deployed in the first Gulf War paid back DARPA’s 30-year investment in AI, and IBM’s Deep Blue(https://www.ibm.com/history/deep-blue) defeated chess grand master Garry Kasparov. In the 2000s, autonomous robots became viable for remote exploration (Nomad, Spirit, and Opportunity) and household cleaning (Roomba). In the 2010s, we saw a viable vision-based gaming system (Microsoft Kinect), self-driving cars (Google Self-Driving Car Project, now Waymo), IBM Watson defeating two past Jeopardy! champions, and a Go-playing victory against a ninth-Dan ranked Go champion (Google DeepMind’s AlphaGo).

Machine learning can solve non-numeric classification problems (e.g., “predict whether this applicant will default on his loan”) and numeric regression problems (e.g., “predict the sales of food processors in our retail locations for the next three months”), both of which are primarily trained using supervised learning (the training data has already been tagged with the answers). Tagging training data sets can be expensive and time-consuming, so supervised learning is often enhanced with semi-supervised learning (apply the supervised learning model from a small tagged data set to a larger untagged data set, and add whatever predicted data has a high probability of being correct to the model for further predictions). Semi-supervised learning can sometimes go off the rails, so you can improve the process with human-in-the-loop (HITL) review of questionable predictions.
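The semi-supervised loop with human review can be sketched in a few lines of Python. This is a toy under stated assumptions: a one-dimensional nearest-centroid classifier stands in for the supervised model, the confidence formula and the 0.8 threshold are arbitrary choices of mine, and the loop makes a single pass rather than iterating.

```python
def centroids(labeled):
    """Mean feature value per class, from (x, label) pairs."""
    sums, counts = {}, {}
    for x, y in labeled:
        sums[y] = sums.get(y, 0.0) + x
        counts[y] = counts.get(y, 0) + 1
    return {y: sums[y] / counts[y] for y in sums}

def predict(cents, x):
    """Return (label, confidence); confidence is a crude distance-based score."""
    dists = {y: abs(x - c) for y, c in cents.items()}
    label = min(dists, key=dists.get)
    total = sum(dists.values())
    conf = 1.0 - dists[label] / total if total else 1.0
    return label, conf

def self_train(labeled, unlabeled, threshold=0.8):
    """Pseudo-label confident predictions; queue the rest for human review."""
    labeled = list(labeled)
    review = []
    cents = centroids(labeled)          # model trained on the small tagged set
    for x in unlabeled:
        label, conf = predict(cents, x)
        if conf >= threshold:
            labeled.append((x, label))  # accept the high-probability prediction
        else:
            review.append(x)            # human-in-the-loop (HITL) candidate
    return centroids(labeled), review

# Toy data, invented for illustration only.
tagged = [(1.0, "low"), (2.0, "low"), (9.0, "high"), (10.0, "high")]
untagged = [1.2, 9.8, 5.4]
new_centroids, needs_review = self_train(tagged, untagged)
```

The ambiguous point (5.4, roughly midway between the classes) is exactly the kind of prediction that would go off the rails if it were accepted automatically.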

While the biggest problem with supervised learning is the expense of labeling the training data, the biggest problem with unsupervised learning (where the data is not labeled) is that it often doesn’t work very well. Nevertheless, unsupervised learning does have its uses. It can sometimes be good for reducing the dimensionality of a data set, exploring the data’s patterns and structure, finding groups of similar objects, and detecting outliers and other noise in the data.

The potential of an agent that learns for the sake of learning is far greater than a system that reduces complex pictures to a binary decision (e.g., dog or cat). Uncovering patterns rather than carrying out a pre-defined task can yield surprising and useful results, as demonstrated when researchers at Lawrence Berkeley National Laboratory ran a text processing algorithm (Word2vec) on several million material science abstracts to predict discoveries of new thermoelectric materials.

Reinforcement learning trains an actor or agent to respond to an environment in a way that maximizes some value, usually by trial and error. That’s different from supervised and unsupervised learning, but reinforcement learning is often combined with them. It has proven useful for training computers to play games and for training robots to perform tasks.
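To make "maximizes some value, usually by trial and error" concrete, here is a minimal sketch of an epsilon-greedy agent on a two-armed bandit, one of the simplest reinforcement learning settings. The reward probabilities, step count, and epsilon value are arbitrary assumptions for the example.

```python
import random

def run_bandit(reward_probs, steps=5000, epsilon=0.1, seed=0):
    """Epsilon-greedy agent: mostly exploit the best-looking arm, sometimes explore."""
    rng = random.Random(seed)
    n = len(reward_probs)
    estimates = [0.0] * n   # running estimate of each arm's average reward
    counts = [0] * n        # how many times each arm was pulled
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n)                            # explore
        else:
            arm = max(range(n), key=lambda a: estimates[a])   # exploit
        # The environment pays 1.0 with the arm's hidden probability, else 0.0.
        reward = 1.0 if rng.random() < reward_probs[arm] else 0.0
        counts[arm] += 1
        # Incremental mean update of the value estimate.
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
    return estimates, counts

# Hidden payout probabilities, invented for illustration only.
estimates, counts = run_bandit([0.2, 0.8])
best_arm = max(range(len(estimates)), key=lambda a: estimates[a])
```

Nothing tells the agent which arm is better; it learns that purely from the rewards it receives, which is the difference from supervised learning.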

Neural networks, which were originally inspired by the architecture of the biological visual cortex, consist of a collection of connected units, called artificial neurons, organized in layers. The artificial neurons often use sigmoid or ReLU (rectified linear unit) activation functions, as opposed to the step functions used for the early perceptrons. Neural networks are usually trained with supervised learning.
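For reference, the three activation functions named above, and a single artificial neuron that uses them, can be sketched as follows. The inputs and weights are made-up values chosen so that the weighted sum is exactly zero.

```python
import math

def step(z):
    """Step function used by early perceptrons: output jumps from 0 to 1."""
    return 1.0 if z >= 0 else 0.0

def sigmoid(z):
    """Smooth squashing of any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def relu(z):
    """Rectified linear unit: pass positives through, clamp negatives to 0."""
    return max(0.0, z)

def neuron(inputs, weights, bias, activation):
    """One artificial neuron: weighted sum of inputs plus bias, then activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)

# Example values, invented for illustration; the weighted sum here is 0.
inputs, weights, bias = [1.0, 2.0], [0.5, -0.25], 0.0
out_step = neuron(inputs, weights, bias, step)
out_sigmoid = neuron(inputs, weights, bias, sigmoid)
out_relu = neuron(inputs, weights, bias, relu)
```

Unlike the step function, sigmoid and ReLU have usable gradients, which is what makes training by backpropagation practical.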

Deep learning uses neural networks that have a large number of “hidden” layers to identify features. Hidden layers come between the input and output layers. The more layers in the model, the more features can be identified. At the same time, the more layers in the model, the longer it takes to train. Hardware accelerators for neural networks include GPUs, TPUs, and FPGAs.

Fine-tuning can speed up the customization of models significantly by training a few final layers on new tagged data without modifying the weights of the rest of the layers. Models that lend themselves to fine-tuning are called base models or foundation models.
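A minimal sketch of the idea, in plain Python rather than a real deep learning framework: a "pretrained" layer is kept frozen while only the final layer's weights are updated on new tagged data. The tiny network, data set, learning rate, and epoch count are all assumptions chosen for illustration.

```python
def frozen_features(x, frozen_w):
    """Pretrained layer (ReLU units); its weights are never updated."""
    return [max(0.0, w * x + b) for w, b in frozen_w]

def head(features, head_w):
    """Final linear layer: the only part that fine-tuning trains."""
    return sum(w * f for w, f in zip(head_w, features))

def loss(data, frozen_w, head_w):
    """Mean squared error over the tagged data."""
    return sum((head(frozen_features(x, frozen_w), head_w) - y) ** 2
               for x, y in data) / len(data)

def fine_tune(data, frozen_w, head_w, lr=0.01, epochs=200):
    """Stochastic gradient descent on the head weights only."""
    head_w = list(head_w)
    for _ in range(epochs):
        for x, y in data:
            feats = frozen_features(x, frozen_w)
            err = head(feats, head_w) - y
            # Gradient of 0.5 * err**2 with respect to each head weight is err * feature.
            head_w = [w - lr * err * f for w, f in zip(head_w, feats)]
    return head_w

# Frozen layer computes [relu(x), relu(-x)]; new task is y = 2 * |x| (toy data).
frozen_w = [(1.0, 0.0), (-1.0, 0.0)]
data = [(-2.0, 4.0), (-1.0, 2.0), (1.0, 2.0), (2.0, 4.0)]
initial_head = [0.0, 0.0]
loss_before = loss(data, frozen_w, initial_head)
tuned_head = fine_tune(data, frozen_w, initial_head)
loss_after = loss(data, frozen_w, tuned_head)
```

Because only two weights are trained, the run finishes almost instantly; the same economy is why fine-tuning a few layers of a large model is so much cheaper than training it from scratch.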

Vision models often use deep convolutional neural networks. Vision models can identify the elements of photographs and video frames, and are usually trained on very large photographic data sets.

Language models sometimes use convolutional neural networks, but recently tend to use recurrent neural networks, long short-term memory, or transformers. Language models can be constructed to translate from one language to another, to analyze grammar, to summarize text, to analyze sentiment, and to generate text. Language models are usually trained on very large language data sets.

Artificial intelligence can be used in many application areas, although how effective it is for any given use is another issue. For example, in healthcare, AI has been applied to diagnosis and treatment, to drug discovery, to surgical robotics, and to clinical documentation. While the results in some of these areas are promising, AI is not yet replacing doctors, not even overworked radiologists and pathologists.

In business, AI has been applied to customer service, with success as long as there’s a path to loop in a human; to data analytics, essentially as an assistant; to supply chain optimization; and to marketing, often for personalization. In technology, AI enables computer vision, i.e., identifying and/or locating objects in digital images and videos, and natural language processing, i.e., understanding written and spoken input and generating written and spoken output. Thus AI helps with autonomous vehicles, as long as they have multi-band sensors; with robotics, as long as there are hardware-based safety measures; and with software development, as long as you treat it like a junior developer with a drinking problem. Other application areas include education, gaming, agriculture, cybersecurity, and finance.

In manufacturing, custom vision models can detect quality deviations. In plant management, custom sound models can detect impending machine failures, and predictive models can replace parts before they actually wear out.

Language models have a history going back to the early 20th century, but large language models (LLMs) emerged with a vengeance after improvements from the application of neural networks in 2000 and, in particular, the introduction of the transformer deep neural network architecture in 2017. LLMs can be useful for a variety of tasks, including text generation from a descriptive prompt, code generation and code completion in various programming languages, text summarization, translation between languages, text to speech, and speech to text.

LLMs often have drawbacks, at least in their current stage of development. Generated text is usually mediocre, and sometimes comically bad and/or wrong. LLMs can invent facts that sound reasonable if you don’t know better; in the trade, these inventions are called hallucinations. Automatic translations are rarely 100% accurate, unless they’ve been vetted by native speakers, which is most often for common phrases. Generated code often has bugs, and sometimes doesn’t even have a hope of running. While LLMs are usually fine-tuned to avoid making controversial statements or recommending illegal acts, these guardrails can be breached by malicious prompts.

Training LLMs requires at least one large corpus of text. Examples for text generation training include the 1B Word Benchmark, Wikipedia, the Toronto Book Corpus, the Common Crawl data set and, for code, the public open-source GitHub repositories. There are (at least) two potential problems with large text data sets: copyright infringement and garbage. Copyright infringement is an unresolved issue that’s currently the subject of multiple lawsuits. Garbage can be cleaned up. For example, the Colossal Clean Crawled Corpus (C4) is an 800 GB, cleaned-up data set based on the Common Crawl data set.

Along with at least one large training corpus, LLMs require large numbers of parameters (weights). The number of parameters grew over the years, until it didn’t. ELMo (2018) has 93.6 M (million) parameters; BERT (2018) was released in 100 M and 340 M parameter sizes; GPT-1 (2018) uses 117 M parameters. T5 (2020) has 220 M parameters. GPT-2 (2019) has 1.5 B (billion) parameters; GPT-3 (2020) has 175 B parameters; and PaLM (2022) has 540 B parameters. GPT-4 (2023) reportedly has 1.76 T (trillion) parameters, although OpenAI has not confirmed that figure.

More parameters generally make a model more accurate, but also make the model require more memory and run more slowly. In 2023, we started to see some smaller models released at multiple sizes. For example, Meta FAIR’s Llama 2 comes in 7B, 13B, and 70B parameter sizes, while Anthropic’s Claude 2 reportedly has 93B and 137B parameter sizes.

One of the motivations for this trend is that smaller generic models trained on more tokens are easier and cheaper to use as foundations for retraining and fine-tuning specialized models than huge models. Another motivation is that smaller models can run on a single GPU or even locally.

Meta FAIR has introduced a bunch of improved small language models since 2023, with the latest numbered Llama 3.1, 3.2, and 3.3. Llama 3.1 has multilingual models in 8B, 70B, and 405B sizes (text in/text out). The Llama 3.2 multilingual large language models comprise a collection of pretrained and instruction-tuned generative models in 1B and 3B sizes (text in/text out); there are also quantized versions of these models. The Llama 3.2 models are smaller and less capable derivatives of Llama 3.1.

The Llama 3.2-Vision collection of multimodal large language models is a collection of pretrained and instruction-tuned image reasoning generative models in 11B and 90B sizes (text + images in / text out). The Llama 3.3 multilingual large language model is a pretrained and instruction-tuned generative model in 70B (text in/text out).

Many other vendors have joined the small language model party, for example Alibaba with the Qwen series and QwQ; Mistral AI with Mistral, Mixtral, and Nemo models; the Allen Institute with Tülu; Microsoft with Phi; Cohere with Command R and Command A; IBM with Granite; Google with Gemma; Stability AI with Stable LM Zephyr; Hugging Face with SmolLM; Nvidia with Nemotron; DeepSeek with DeepSeek-V3 and DeepSeek-R1; and Manus AI with Manus. Many of these models are available to run locally in Ollama.

Image generators can start with text prompts and produce images; start with an image and text prompt to produce other images; edit and retouch photographs; and create videos from text prompts and images. While there have been several algorithms for image generation in the past, the current dominant method is to use diffusion models.

Services that use diffusion models include Stable Diffusion, Midjourney, Dall-E, Adobe Firefly, and Leonardo AI. Each of these has a different model, trained on different collections of images, and has a different user interface.

In general, these models train on large collections of labeled images. The training process adds gaussian noise to each image, iteratively, and then tries to recreate the original image using a neural network. The difference between the original image and the recreated image defines the loss of the neural network.

To generate a new image from a prompt, the method starts with random noise, and iteratively uses a diffusion process controlled by the trained model and the prompt. You can keep running the diffusion process until you arrive at the desired level of detail.
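The noising half of that process is easy to sketch. The example below assumes a DDPM-style linear noise schedule (my assumption; each service uses its own), treats a four-number list as the "image," and uses an oracle that already knows the noise in place of the trained neural network, purely to show what the loss measures.

```python
import math
import random

def alpha_bar(t, num_steps, beta_start=1e-4, beta_end=0.02):
    """Fraction of the original signal left after t noising steps (linear schedule)."""
    prod = 1.0
    for s in range(t):
        beta = beta_start + (beta_end - beta_start) * s / (num_steps - 1)
        prod *= 1.0 - beta
    return prod

def add_noise(pixels, t, num_steps, rng):
    """Jump straight to noising step t: mix the image with Gaussian noise."""
    ab = alpha_bar(t, num_steps)
    noise = [rng.gauss(0.0, 1.0) for _ in pixels]
    noisy = [math.sqrt(ab) * p + math.sqrt(1.0 - ab) * n
             for p, n in zip(pixels, noise)]
    return noisy, noise

def mse(a, b):
    """Mean squared difference between two images: the training loss."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

rng = random.Random(0)
image = [0.1, 0.5, 0.9, 0.3]      # toy "image", invented for illustration
T, t = 1000, 500
noisy, noise = add_noise(image, t, T, rng)
ab = alpha_bar(t, T)

# Oracle reconstruction: a perfect denoiser would recover the original exactly.
oracle = [(x - math.sqrt(1.0 - ab) * n) / math.sqrt(ab)
          for x, n in zip(noisy, noise)]
loss_untrained = mse(image, noisy)    # doing nothing leaves a large loss
loss_oracle = mse(image, oracle)      # a perfect denoiser drives the loss to ~0
```

Training pushes the network from the first loss toward the second; generation then runs the learned denoiser repeatedly, starting from pure noise.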

Diffusion-based image generators currently tend to fall down when you ask them to produce complicated images with multiple subjects. They also have trouble generating the correct number of fingers on people, and tend to generate lips that are unrealistically smooth.

Retrieval-augmented generation (RAG) is a technique used to “ground” large language models with specific data sources, often sources that weren’t included in the models’ original training. RAG’s three steps are retrieval from a specified source, augmentation of the prompt with the context retrieved from the source, and then generation using the model and the augmented prompt.
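The three steps can be sketched as three small functions. This is a toy under loud assumptions: retrieval here is naive word overlap rather than the vector search most real systems use, the documents are invented, and `generate` is a hypothetical placeholder standing in for a call to an actual model.

```python
def retrieve(query, documents, k=2):
    """Step 1: rank documents by how many words they share with the query."""
    q = set(query.lower().split())
    ranked = sorted(documents,
                    key=lambda d: len(q & set(d.lower().split())),
                    reverse=True)
    return ranked[:k]

def augment(query, context):
    """Step 2: build a prompt that includes the retrieved context."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {query}"

def generate(prompt):
    """Step 3: hypothetical stand-in for an LLM call; returns a dummy string."""
    return f"[model output for a prompt of {len(prompt)} characters]"

# Toy knowledge source, invented for illustration only.
documents = [
    "MCP was open-sourced by Anthropic in 2024",
    "Diffusion models start from random noise",
    "SYSTRAN was produced by Peter Toma",
]
query = "Who produced SYSTRAN"
context = retrieve(query, documents, k=1)
prompt = augment(query, context)
answer = generate(prompt)
```

Everything the model knows about the question beyond its training data arrives through `prompt`, which is why retrieval quality tends to dominate the quality of the final answer.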

At one point, RAG seemed like it would be the answer to everything that’s wrong with LLMs. While RAG can help, it isn’t a magical fix. In addition, RAG can introduce its own issues. Finally, as LLMs get better, adding larger context windows and better search integrations, RAG is becoming less necessary for many use cases.

Meanwhile, several new, improved kinds of RAG architectures have been introduced. One example combines RAG with a graph database. The combination can make the results more accurate and relevant, particularly when relationships and semantic content are important. Another example, agentic RAG, expands the resources available to the LLM to include tools and functions as well as external knowledge sources, such as text databases.

Agentic RAG, often called agents or AI assistants, is not at all the same as the agents of the late 1990s. Modern AI agents rely on other programs to provide context to assist them in generating correct answers to queries. The catch here is that other programs have no standard, universal interface or API.

In 2024, Anthropic open-sourced the Model Context Protocol (MCP), which allows all models and external programs that support it to communicate easily. I wouldn’t normally expect other companies to support something like MCP, as it normally takes years of acrimonious meetings and negotiations to establish an industry standard. Nevertheless, there are some encouraging mitigating factors:

* There’s an open-source repository of MCP servers.

* Anthropic has shared pre-built MCP servers for popular enterprise systems, such as Google Drive, Slack, GitHub, Git, Postgres, and Puppeteer.

* Claude 3.5 Sonnet is adept at quickly building MCP server implementations.

While no one can promise wide adoption of MCP, Anthropic seems to have removed the technical barriers to adoption. If only removing the political barriers were as easy.

Slow training and inference have been serious problems ever since we started using neural networks, and only got worse with the advent of deep learning models, never mind large language models. Nvidia made a fortune supplying GPU hardware to accelerate training and inference, and there are several other hardware accelerators to consider. But throwing hardware at the problem isn’t the only way to solve it, and I’ve written about several of the software techniques, such as model quantization.

The new goal for the cool kids in the AI space is to achieve artificial general intelligence (AGI). That is defined to require a lot more in the way of smarts and generalization ability than Turing’s imitation game. Google Cloud defines AGI this way: "Artificial general intelligence (AGI) refers to the hypothetical intelligence of a machine that possesses the ability to understand or learn any intellectual task that a human being can. It is a type of artificial intelligence (AI) that aims to mimic the cognitive abilities of the human brain.

In addition to the core characteristics mentioned earlier, AGI systems also possess certain key traits that distinguish them from other types of AI:

* Generalization ability: AGI can transfer knowledge and skills learned in one domain to another, enabling it to adapt to new and unseen situations effectively.

* Common sense knowledge: AGI has a vast repository of knowledge about the world, including facts, relationships, and social norms, allowing it to reason and make decisions based on this common understanding.

The pursuit of AGI involves interdisciplinary collaboration among fields such as computer science, neuroscience, and cognitive psychology. Advancements in these areas are continuously shaping our understanding and the development of AGI. Currently, AGI remains largely a concept and a goal that researchers and engineers are working towards."

The obvious next question is how you might identify an AGI system. As it happens, a new suite of benchmarks to answer that very question was recently released, ARC-AGI-2. The ARC-AGI-2 announcement reads: "Today we’re excited to launch ARC-AGI-2 to challenge the new frontier. ARC-AGI-2 is even harder for AI (in particular, AI reasoning systems), while maintaining the same relative ease for humans. Pure LLMs score 0% on ARC-AGI-2, and public AI reasoning systems achieve only single-digit percentage scores. In contrast, every task in ARC-AGI-2 has been solved by at least two humans in under two attempts."

Note that the comparison is to ARC-AGI-1, which was released in 2019.

The other interesting initial finding of ARC-AGI-2 is the cost efficiency of each system, including human panels. CoT means chain of thought, which is a technique for making LLMs think things through.

By the way, there’s a competition with $1 million in prizes(More details: https://arcprize.org/competitions).

Right now, generative AI seems to be a few years away from production quality for most application areas. For example, the best LLMs can currently do a fair to good job of summarizing text, but do a lousy job of writing essays. Students who depend on LLMs to write their papers can expect C’s at best, and F’s if their teachers or professors recognize the tells and quirks of the models used.

Along the same lines, there’s a common description of articles and books generated by LLMs: “AI slop.” AI slop not only powers a race to the bottom in publishing, but it also opens the possibility that future LLMs that train on corpora contaminated by AI slop will be worse than today’s models.

There is research that says that heavy use of AI (to the point of over-reliance) tends to diminish users’ abilities to think critically, solve problems, and express creativity. On the other hand, there is research that says that using AI for guidance or as a supportive tool actually boosts cognitive development.

Generative AI for code completion and code generation is a special case, because code checkers, compilers, and test suites can often expose any errors made by the model. If you use AI code generators as a faster way to write code that you could have written yourself, it can sometimes cause a net gain in productivity. On the other hand, if you are a novice attempting “vibe coding,” the chances are good that all you are producing is technical debt that would take longer to fix than a good programmer would take to write the code from scratch.

Self-driving using AI is currently a mixed bag. Waymo AI, which originated as the Google Self-Driving Car Project, uses lidar, cameras, and radar to synthesize a better image of the real world than human eyes can manage. On the other hand, Tesla Full Self-Driving (FSD), which relies only on cameras, is perceived as error-prone and “a mess” by many users and reviewers.

Meanwhile, AGI seems to be a decade away, if not more. Yes, the CEOs of the major LLM companies publicly predict AGI within five years, but they’re not exactly unbiased, given that their jobs depend on achieving AGI. The models and reasoning systems will certainly keep improving on benchmarks, but benchmarks rarely reflect the real world, no matter how hard the benchmark authors try. And the real world is what matters.

Next Bill Gates Will Be?

Sep. 21st, 2025 12:33 pm

Alexandr Wang, who became the world’s youngest self-made billionaire at 24, is now, at 28, running one of the most ambitious AI efforts in Silicon Valley. In his first 60 days at Meta, he built a 100-person lab he described to TBPN hosts John Coogan and Jordi Hays as “smaller and more talent dense than any of the other labs.” His goal: nothing less than superintelligence.

Wang, with his aerial view of the industry, has advice for kids, especially those in Gen Alpha now entering middle school: Forget gaming, sports, or traditional after-school hobbies.

“If you are like 13 years old, you should spend all of your time vibe coding,” he said in his recent TBPN interview. “That’s how you should live your life.”

For Wang, the reasoning is simple. Every engineer, himself included, is now writing code that he believes will be obsolete within five years.

“Literally all the code I’ve written in my life will be replaced by what will be produced by an AI model,” he said.

That realization has left him, in his words, “radicalized by AI coding.” What matters most now isn’t syntax, or learning a particular language, but time spent experimenting with and steering AI tools.

“It’s actually an incredible moment of discontinuity,” Wang said. “If you just happen to spend 10,000 hours playing with the tools and figuring out how to use them better than other people, that’s a huge advantage.”

Teenagers have a clear advantage over adults: time and freedom to immerse themselves in new technology. And while in the past, entrepreneurial teenagers leveraged this time to be “sneaker flippers” or run Minecraft servers, Wang says the focus should now be on the code.

He compares the moment to the dawn of the PC revolution. The Bill Gateses and Mark Zuckerbergs of the world had an “immense advantage” simply because they grew up tinkering with the earliest machines.

“That moment is happening right now,” Wang said. “And the people who spend the most time with it will have the edge in the future economy.”

Wang isn’t coy about Meta’s ambitions. He calls the company’s infrastructure, scale, and product distribution unmatched.

“We have the business model to support building literally hundreds of billions of dollars of compute,” he said.

His team, just over 100 people, is deliberately designed to be “smaller and more talent dense” than rivals. “The other labs are like 10 times bigger,” Wang said, but his lab has “cracked” coders.

The lab is split into three pillars: research, product, and infrastructure. Research builds the models Wang says will “ultimately be superintelligent.” Product ensures they get distributed across billions of users through Meta’s platforms. And infrastructure focuses on what he calls “literally the largest data centers in the world.”

Wang is particularly excited about hardware. Like many Meta executives now, he points to the company’s new smart glasses, which had a hilariously foppish demo, as the “natural delivery mechanism for superintelligence.”

Placed right next to the human senses, they will merge digital perception with cognition.

“It will literally feel like cognitive enhancement,” Wang said. “You will gain 100 IQ points by having your superintelligence right next to you.”

Vibe coding is the shorthand for this shift: using natural language prompts to generate and iterate on code. Rather than writing complex syntax, users describe their intent, and AI produces functioning prototypes.

The concept is spreading across Silicon Valley’s C-suites. Klarna CEO Sebastian Siemiatkowski has said he can now test ideas in 20 minutes, instead of burning weeks of engineering cycles. Google CEO Sundar Pichai revealed that AI already generates more than 30% of new code at the company, calling it the biggest leap in software creation in 25 years.

Wang takes that further. For him, vibe coding isn’t just a productivity hack, but a future cultural mandate. What matters isn’t the code itself — it’s the hours of intuition-building that come from pushing AI tools to their limits, which is why he urges Gen Alpha to start early.

“The role of an engineer is just very different now than it was before,” he said.

OpenAI, the creator of ChatGPT, acknowledged in its own research that large language models will always produce hallucinations due to fundamental mathematical constraints that cannot be solved through better engineering, marking a significant admission from one of the AI industry’s leading companies.

The study, published on September 4 and led by OpenAI researchers Adam Tauman Kalai, Edwin Zhang, and Ofir Nachum alongside Georgia Tech’s Santosh S. Vempala, provided a comprehensive mathematical framework explaining why AI systems must generate plausible but false information even when trained on perfect data.

“Like students facing hard exam questions, large language models sometimes guess when uncertain, producing plausible yet incorrect statements instead of admitting uncertainty,” the researchers wrote in the paper(More details: https://arxiv.org/pdf/2509.04664). “Such ‘hallucinations’ persist even in state-of-the-art systems and undermine trust.”

The admission carried particular weight given OpenAI’s position as the creator of ChatGPT, which sparked the current AI boom and convinced millions of users and enterprises to adopt generative AI technology.

The researchers demonstrated that hallucinations stemmed from statistical properties of language model training rather than implementation flaws. The study established that “the generative error rate is at least twice the IIV misclassification rate,” where IIV referred to “Is-It-Valid” and demonstrated mathematical lower bounds that prove AI systems will always make a certain percentage of mistakes, no matter how much the technology improves.

The researchers demonstrated their findings using state-of-the-art models, including those from OpenAI’s competitors. When asked “How many Ds are in DEEPSEEK?” the DeepSeek-V3 model with 600 billion parameters “returned ‘2’ or ‘3’ in ten independent trials” while Meta AI and Claude 3.7 Sonnet performed similarly, “including answers as large as ‘6’ and ‘7.’”

OpenAI also acknowledged the persistence of the problem in its own systems. The company stated in the paper that “ChatGPT also hallucinates. GPT‑5 has significantly fewer hallucinations, especially when reasoning, but they still occur. Hallucinations remain a fundamental challenge for all large language models.”

OpenAI’s own advanced reasoning models actually hallucinated more frequently than simpler systems. The company’s o1 reasoning model “hallucinated 16 percent of the time” when summarizing public information, while newer models o3 and o4-mini “hallucinated 33 percent and 48 percent of the time, respectively.”

“Unlike human intelligence, it lacks the humility to acknowledge uncertainty,” said Neil Shah, VP for research and partner at Counterpoint Technologies. “When unsure, it doesn’t defer to deeper research or human oversight; instead, it often presents estimates as facts.”

The OpenAI research identified three mathematical factors that made hallucinations inevitable: epistemic uncertainty when information appeared rarely in training data, model limitations where tasks exceeded current architectures’ representational capacity, and computational intractability where even superintelligent systems could not solve cryptographically hard problems.

Beyond proving hallucinations were inevitable, the OpenAI research revealed that industry evaluation methods actively encouraged the problem. Analysis of popular benchmarks, including GPQA, MMLU-Pro, and SWE-bench, found nine out of 10 major evaluations used binary grading that penalized “I don’t know” responses while rewarding incorrect but confident answers.

“We argue that language models hallucinate because the training and evaluation procedures reward guessing over acknowledging uncertainty,” the researchers wrote.

Charlie Dai, VP and principal analyst at Forrester, said enterprises already faced challenges with this dynamic in production deployments. “Clients increasingly struggle with model quality challenges in production, especially in regulated sectors like finance and healthcare,” Dai told Computerworld.

The research proposed “explicit confidence targets” as a solution, but acknowledged that fundamental mathematical constraints meant complete elimination of hallucinations remained impossible.
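The incentive at stake can be sketched with a small expected-score calculation. This is an illustration under stated assumptions, not the paper's evaluation code: a correct answer earns 1 point, "I don't know" earns 0, and a wrong answer costs a penalty. Under binary grading the penalty is 0, so guessing never scores worse than abstaining. With a confidence target `t`, the sketch assumes a penalty of `t / (1 - t)`, chosen so that answering only pays off when the model's chance of being right exceeds `t`. All function names are hypothetical.

```python
def expected_score(p_correct: float, wrong_penalty: float) -> float:
    """Expected score for answering when the answer is right with probability p_correct.

    A correct answer earns 1 point, a wrong answer loses wrong_penalty points,
    and abstaining ("I don't know") always scores 0.
    """
    return p_correct * 1.0 - (1.0 - p_correct) * wrong_penalty


def should_answer(p_correct: float, wrong_penalty: float) -> bool:
    """Answer only if the expected score beats the 0 points earned by abstaining."""
    return expected_score(p_correct, wrong_penalty) > 0.0


def penalty_for_target(t: float) -> float:
    """Assumed penalty t / (1 - t): the break-even confidence is exactly t."""
    return t / (1.0 - t)


if __name__ == "__main__":
    binary_penalty = 0.0                        # binary grading: wrong answers cost nothing
    targeted_penalty = penalty_for_target(0.75)  # assumed target t = 0.75
    for p in (0.1, 0.5, 0.9):
        print(p, should_answer(p, binary_penalty), should_answer(p, targeted_penalty))
```

With binary grading the sketch recommends answering at every confidence level shown, even 10%. With the assumed target of 0.75 it recommends answering only at 90% confidence.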

Experts believed the mathematical inevitability of AI errors demanded new enterprise strategies.

“Governance must shift from prevention to risk containment,” Dai said. “This means stronger human-in-the-loop processes, domain-specific guardrails, and continuous monitoring.”

Current AI risk frameworks have proved inadequate for the reality of persistent hallucinations. “Current frameworks often underweight epistemic uncertainty, so updates are needed to address systemic unpredictability,” Dai added.

Shah advocated for industry-wide evaluation reforms similar to automotive safety standards. “Just as automotive components are graded under ASIL standards to ensure safety, AI models should be assigned dynamic grades, nationally and internationally, based on their reliability and risk profile,” he said.

Both analysts agreed that vendor selection criteria needed fundamental revision. “Enterprises should prioritize calibrated confidence and transparency over raw benchmark scores,” Dai said. “AI leaders should look for vendors that provide uncertainty estimates, robust evaluation beyond standard benchmarks, and real-world validation.”

Shah suggested developing “a real-time trust index, a dynamic scoring system that evaluates model outputs based on prompt ambiguity, contextual understanding, and source quality.”

These enterprise concerns aligned with broader academic findings. Harvard Kennedy School research found(More details: https://misinforeview.hks.harvard.edu/article/new-sources-of-inaccuracy-a-conceptual-framework-for-studying-ai-hallucinations) that “downstream gatekeeping struggles to filter subtle hallucinations due to budget, volume, ambiguity, and context sensitivity concerns.”

Dai noted that reforming evaluation standards faced significant obstacles. “Reforming mainstream benchmarks is challenging. It’s only feasible if it’s driven by regulatory pressure, enterprise demand, and competitive differentiation.”

The OpenAI researchers concluded that their findings required industry-wide changes to evaluation methods. “This change may steer the field toward more trustworthy AI systems,” they wrote, while acknowledging that their research proved some level of unreliability would persist regardless of technical improvements.

For enterprises, the message appeared clear: AI hallucinations represented not a temporary engineering challenge, but a permanent mathematical reality requiring new governance frameworks and risk management strategies.

I asked ChatGPT for an AI’s take on whether we should fear AI. Here's the response:

"Fear of AI should be replaced with informed vigilance. While powerful AI systems present legitimate concerns around job displacement, privacy, security, and autonomous decision-making, these risks can be managed through robust governance frameworks, transparency requirements, and human oversight mechanisms."

So, the technology itself is neither inherently good nor evil—its impact depends entirely on how we design, deploy, and regulate it. Rather than fear, we need thoughtful engagement from diverse stakeholders to ensure AI development aligns with human values and benefits humanity broadly while minimizing potential harms.

Professionals in AI believe the greatest fears center on both immediate and long-term risks. Many worry about AI eroding core human capabilities like critical thinking, creativity, and empathy as people increasingly defer decision-making to algorithms. There's growing concern about AI exacerbating existing inequalities and bias, as systems trained on limited datasets can perpetuate discrimination.

Some experts, particularly those impressed by recent advances in large language models, fear the potential development of superintelligent AI that could act beyond human control. This remains hotly debated, with figures like Meta's Yann LeCun dismissing existential threats while others like Nate Soares warn of catastrophic risks. Many researchers emphasize that unregulated AI development focused on profit maximization poses immediate societal dangers that shouldn't be overlooked.

Expert estimates on AI catastrophic risks vary dramatically. In a notable survey of AI researchers, probability assessments of extinction-level events by 2070 ranged from virtually zero (0.00002%) to alarmingly high (>77%). A separate survey found half of AI researchers placed the risk at 5% or higher.

Among business leaders, perspectives are equally divided. Forty-two percent of CEOs surveyed believe AI could potentially destroy humanity within 5-10 years, while 58% dismiss such concerns entirely.

Most experts agree that while complete extinction remains difficult to achieve technically, the interconnected nature of AI risks with nuclear weapons, bioterrorism, and critical infrastructure warrants serious preventative measures.

Pioneering computer scientist Geoffrey Hinton, whose work has earned him a Nobel Prize and the moniker “godfather of AI,” said artificial intelligence will spark a surge in unemployment and profits.

In a wide-ranging interview with the Financial Times, the former Google scientist cleared the air about why he left the tech giant, raised alarms on potential threats from AI, and revealed how he uses the technology. But he also predicted who the winners and losers will be.

“What’s actually going to happen is rich people are going to use AI to replace workers,” Hinton said. “It’s going to create massive unemployment and a huge rise in profits. It will make a few people much richer and most people poorer. That’s not AI’s fault, that is the capitalist system.”

That echoes comments he gave to Fortune last month, when he said AI companies are more concerned with short-term profits than the long-term consequences of the technology.

For now, layoffs haven’t spiked, but evidence is mounting that AI is shrinking opportunities, especially at the entry level where recent college graduates start their careers.

A survey from the New York Fed found that companies using AI are much more likely to retrain their employees than fire them, though layoffs are expected to rise in the coming months.

Hinton said earlier that healthcare is the one industry that will be safe from the potential jobs armageddon.

“If you could make doctors five times as efficient, we could all have five times as much health care for the same price,” he explained on the Diary of a CEO YouTube series in June. “There’s almost no limit to how much health care people can absorb—[patients] always want more health care if there’s no cost to it.”

Still, Hinton believes that jobs that perform mundane tasks will be taken over by AI, while sparing some jobs that require a high level of skill.

In his interview with the FT, he also dismissed OpenAI CEO Sam Altman’s idea to pay a universal basic income as AI disrupts the economy and reduces demand for workers, saying it “won’t deal with human dignity” and the value people derive from having jobs.

Hinton has long warned about the dangers of AI without guardrails, estimating a 10% to 20% chance of the technology wiping out humans after the development of superintelligence.

In his view, the dangers of AI fall into two categories: the risk the technology itself poses to the future of humanity, and the consequences of AI being manipulated by people with bad intent.

In his FT interview, he warned AI could help someone build a bioweapon and lamented the Trump administration’s unwillingness to regulate AI more closely, while China is taking the threat more seriously. But he also acknowledged potential upside from AI amid its immense possibilities and uncertainties.

“We don’t know what is going to happen, we have no idea, and people who tell you what is going to happen are just being silly,” Hinton said. “We are at a point in history where something amazing is happening, and it may be amazingly good, and it may be amazingly bad. We can make guesses, but things aren’t going to stay like they are.”

Meanwhile, he told the FT how he uses AI in his own life, saying OpenAI’s ChatGPT is his product of choice. While he mostly uses the chatbot for research, Hinton revealed that a former girlfriend used ChatGPT “to tell me what a rat I was” during their breakup.

“She got the chatbot to explain how awful my behavior was and gave it to me. I didn’t think I had been a rat, so it didn’t make me feel too bad . . . I met somebody I liked more, you know how it goes,” he quipped.

Hinton also explained why he left Google in 2023. While media reports have said he quit so he could speak more freely about the dangers of AI, the 77-year-old Nobel laureate denied that was the reason.

“I left because I was 75, I could no longer program as well as I used to, and there’s a lot of stuff on Netflix I haven’t had a chance to watch,” he said. “I had worked very hard for 55 years, and I felt it was time to retire . . . And I thought, since I am leaving anyway, I could talk about the risks.”

OpenAI Announces Hiring Platform

Sep. 5th, 2025 01:49 pm OpenAI has announced it is working on an AI-powered hiring platform.

The OpenAI Jobs Platform will use AI to help "find the perfect matches between what companies need and what workers can offer," Fidji Simo, company CEO for applications, said in a blog post(More details: https://openai.com/index/expanding-economic-opportunity-with-ai).

The upcoming platform will serve a broad range of organizations. Alongside helping large companies hire talent at every level, the platform will feature a dedicated section for local businesses and government offices.

The Jobs Platform will roll out by mid-2026(More details: https://techcrunch.com/2025/09/04/openai-announces-ai-powered-hiring-platform-to-take-on-linkedin/?utm_campaign=social&utm_source=X&utm_medium=organic).

Alongside that, OpenAI is also building a Certifications program. The goal is to help companies trust that the talent they are hiring is indeed proficient in AI. "Most of the companies we talk to want to make sure their employees know how to use our tools," Simo says.

The OpenAI Certifications program will be an extension of its free, online OpenAI Academy launched earlier this year(https://academy.openai.com). It will teach and provide certifications for various levels of AI fluency. Candidates will be able to prepare using ChatGPT's Study Mode.

Companies will also have the option to make OpenAI Certifications part of their learning and development programs. Walmart has already signed up as one of the launch partners, and OpenAI hopes to certify a total of 10 million Americans by 2030. Both initiatives are part of the company's "commitment to the White House's efforts toward expanding AI literacy."

Once the Jobs Platform rolls out next year, it will be competing with LinkedIn. Interestingly, LinkedIn's parent company, Microsoft, and co-founder Reid Hoffman are both investors in OpenAI.

In the blog post, Simo also discussed the potential impact of AI on the job market. Contrary to popular belief, the executive argues that "AI will unlock more opportunities for more people than any technology in history. It will help companies operate more efficiently, give anyone the power to turn their ideas into income, and create jobs that don't even exist today."

Benefiting from AI-driven Layoffs

Sep. 4th, 2025 05:16 pm The latest example is Salesforce CEO Marc Benioff talking about cutting 4,000 customer service jobs thanks to the type of AI that Matthew McConaughey and Woody Harrelson seemingly can’t function without. In June, Benioff said that AI is doing 50% of the work at Salesforce. Yesterday, the slimmed-down company reported a Q2 double beat on revenue ($10.24b vs. $10.14b) and EPS ($2.91 vs. $2.78), although weaker guidance for Q3 sent the stock tumbling after hours.

There’s no shortage of companies leveraging AI to remain profitable, to the delight of (non-Salesforce) investors:

* Wells Fargo’s CEO has touted trimming its workforce for 20 straight quarters. Its stock is up 228% over the past five years.

* Bank of America CEO Brian Moynihan wasn’t hiding it during a recent earnings call when he said the company has let go of 88,000 employees over the past 15 years. BofA stock is up 95% since 2020.

* Amazon, with its share value up 28% over the past year, recently told staff that AI implementation would lead to layoffs.